DOI: https://doi.org/10.1007/s00784-024-05566-w

PMID: https://pubmed.ncbi.nlm.nih.gov/38411726

Publication Date: 2024-02-27

A novel deep learning-based perspective for tooth numbering and caries detection

© The Author(s) 2024

Abstract

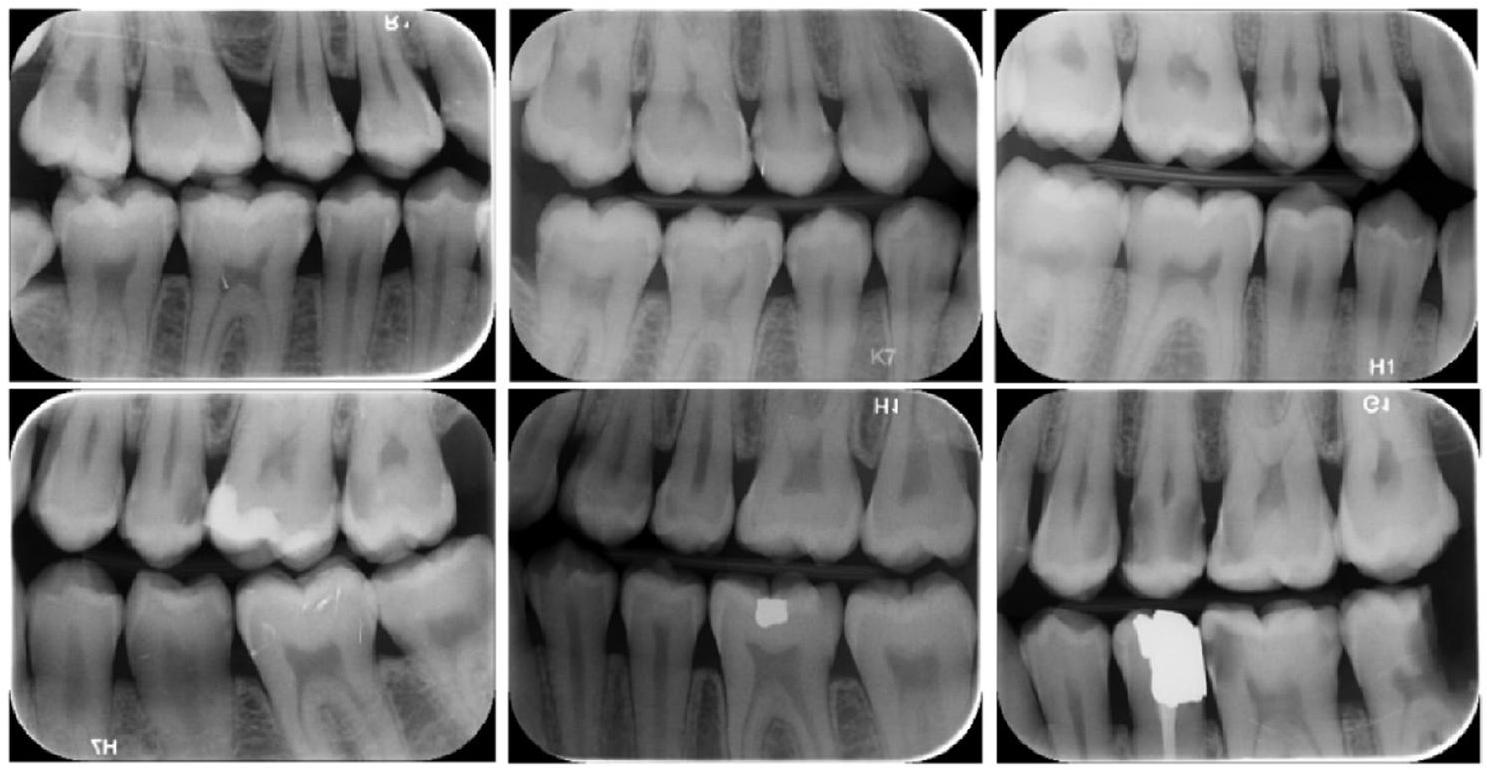

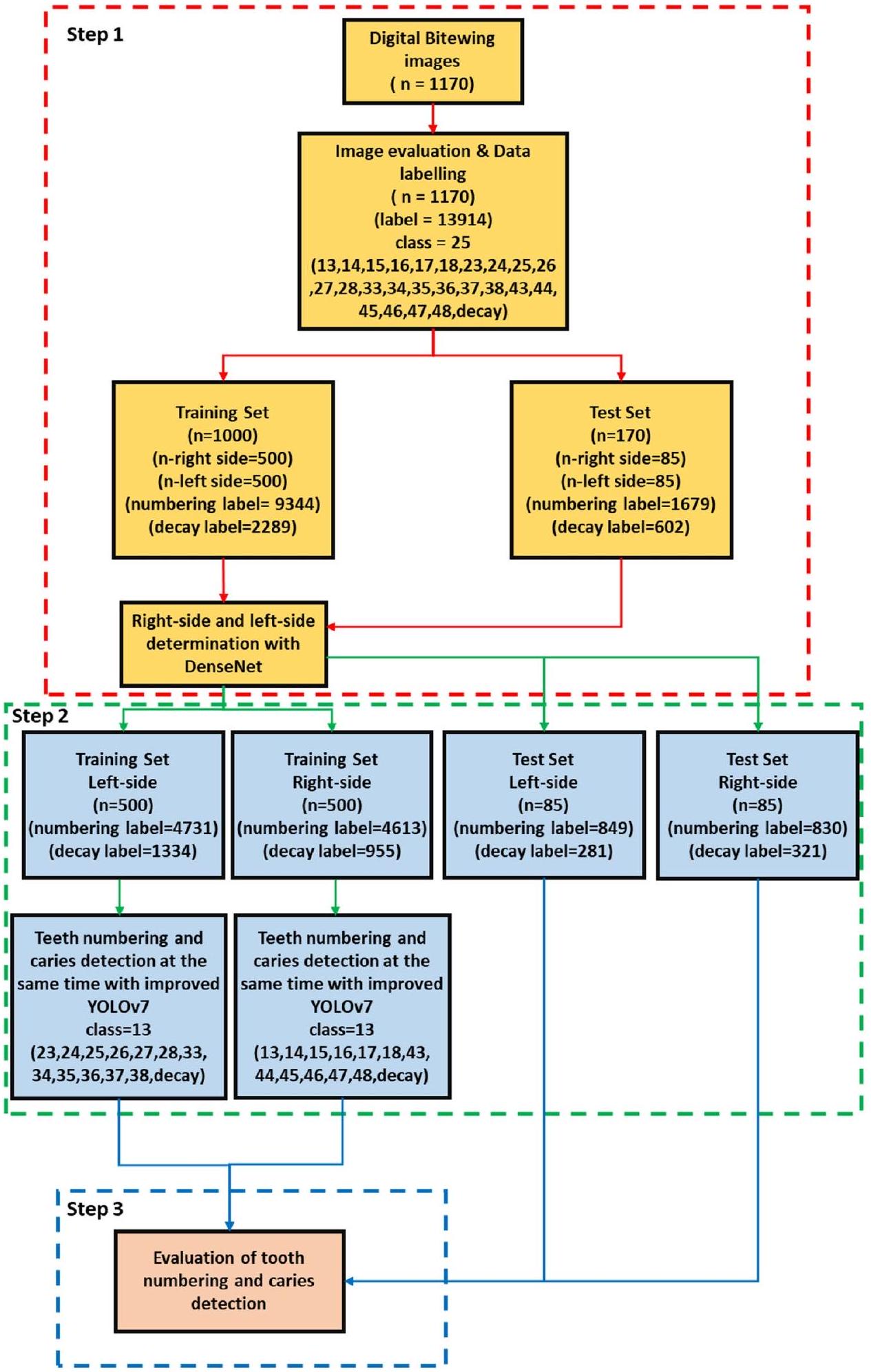

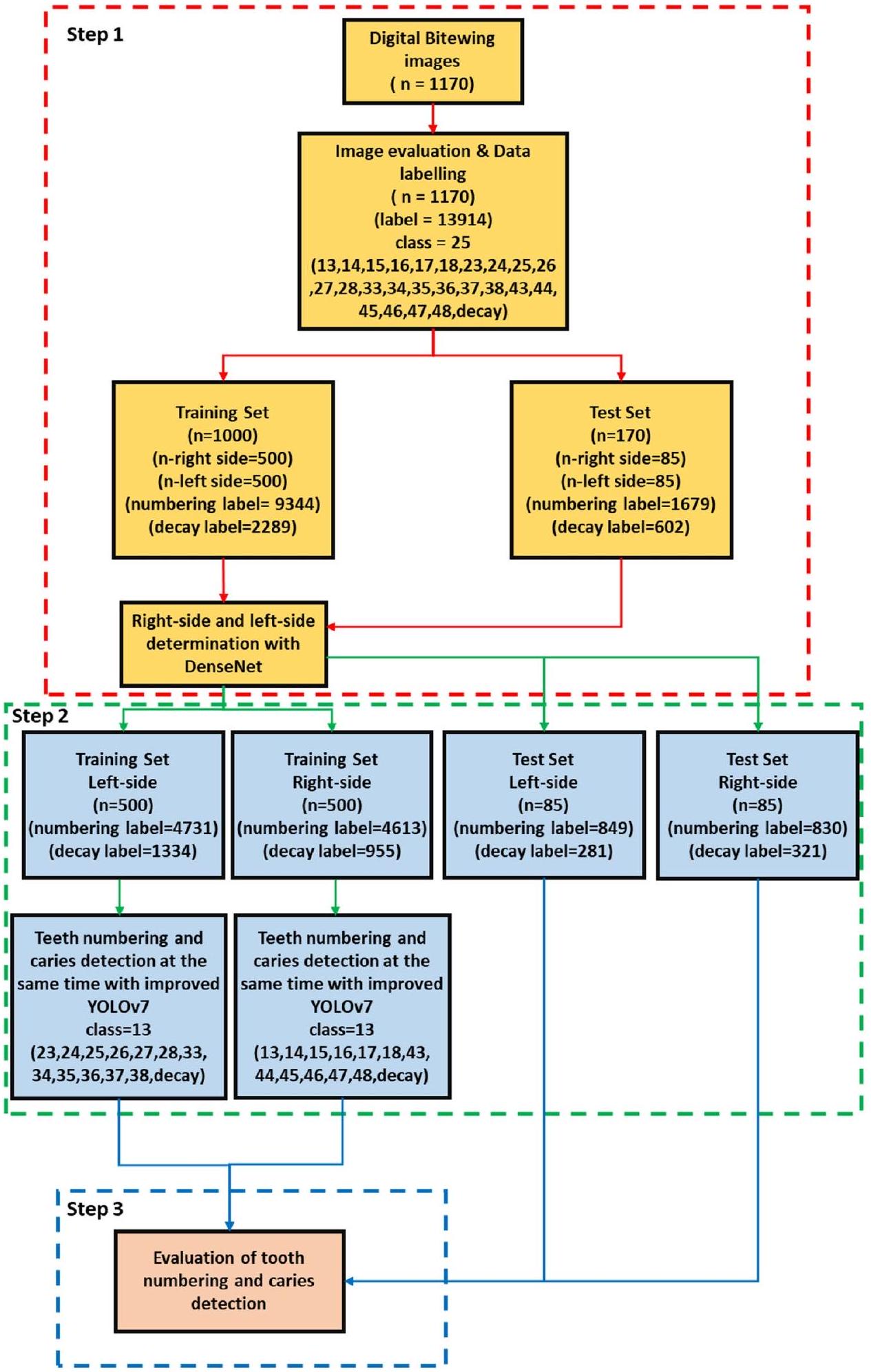

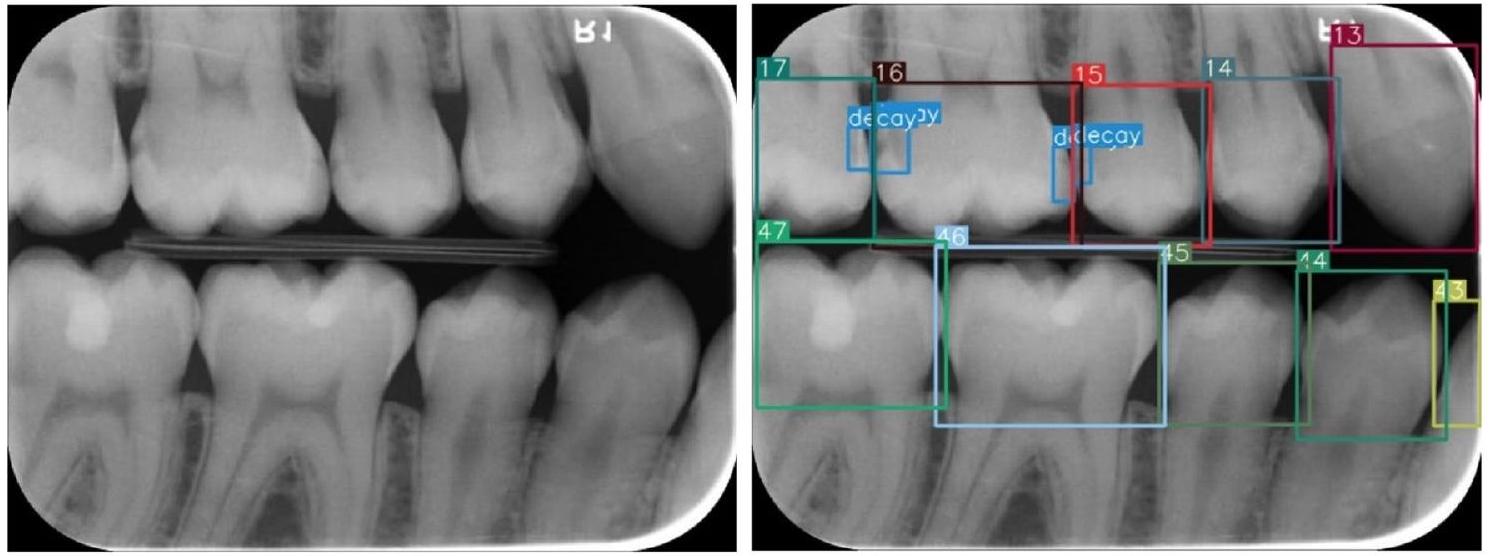

Objectives The aim of this study was to automatically detect and number teeth in digital bitewing radiographs obtained from patients, and to evaluate the diagnostic efficiency for decayed teeth in real time, using deep learning algorithms. Methods The dataset consisted of 1170 anonymized digital bitewing radiographs randomly obtained from faculty archives. After the image evaluation and labeling process, the dataset was split into training and test datasets. This study proposed an end-to-end pipeline architecture consisting of three stages for matching tooth numbers and caries lesions to enhance treatment outcomes and prevent potential issues. Initially, a pre-trained convolutional neural network (CNN) was utilized to determine the side of the bitewing images. Then, an improved CNN model, YOLOv7, was proposed for tooth numbering and caries detection. In the final stage, our developed algorithm assessed which teeth have caries by comparing the numbered teeth with the detected caries, using the intersection over union value for the matching process. Results According to test results, the recall, precision, and F1-score values were

Introduction

method, which is insufficient for the early detection of approximal caries, is one of the most basic of them [5, 6]. In addition to the visual-tactile examination method, radiological examination, which is commonly employed in intraoral imaging, plays a crucial role in dental practice. In this regard, panoramic, periapical and bitewing radiography are regularly utilized in practice. Visualization and evaluation of dental status on radiographs is one of the most important steps in disease diagnosis [7, 8]. Today, with the help of digital bitewing radiographs, which have high recall and specificity in the detection of approximal caries, caries lesions are detected easily, cheaply and successfully compared to many other methods [9, 10].

practice of the physician [35]. To the best of our knowledge, no study has investigated teeth detection, numbering, and caries detection at the same time in digital bitewing radiographs utilizing a CNN approach based on deep learning (DL). The proposed method makes this study unique compared to the existing literature.

Material and methods

Sample size determination

Image dataset

| | Train | Test |
| --- | --- | --- |
| Decay | 2289 | 602 |
| Numbering | 9344 | 1679 |
| Total surfaces | 16,674 | 2842 |
| Lesion prevalence | 24% | 21.16% |
| Total images | 1000 | 170 |

Study design

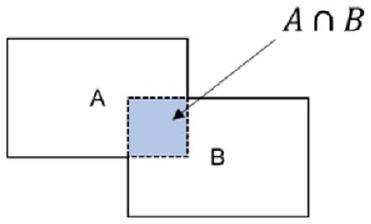

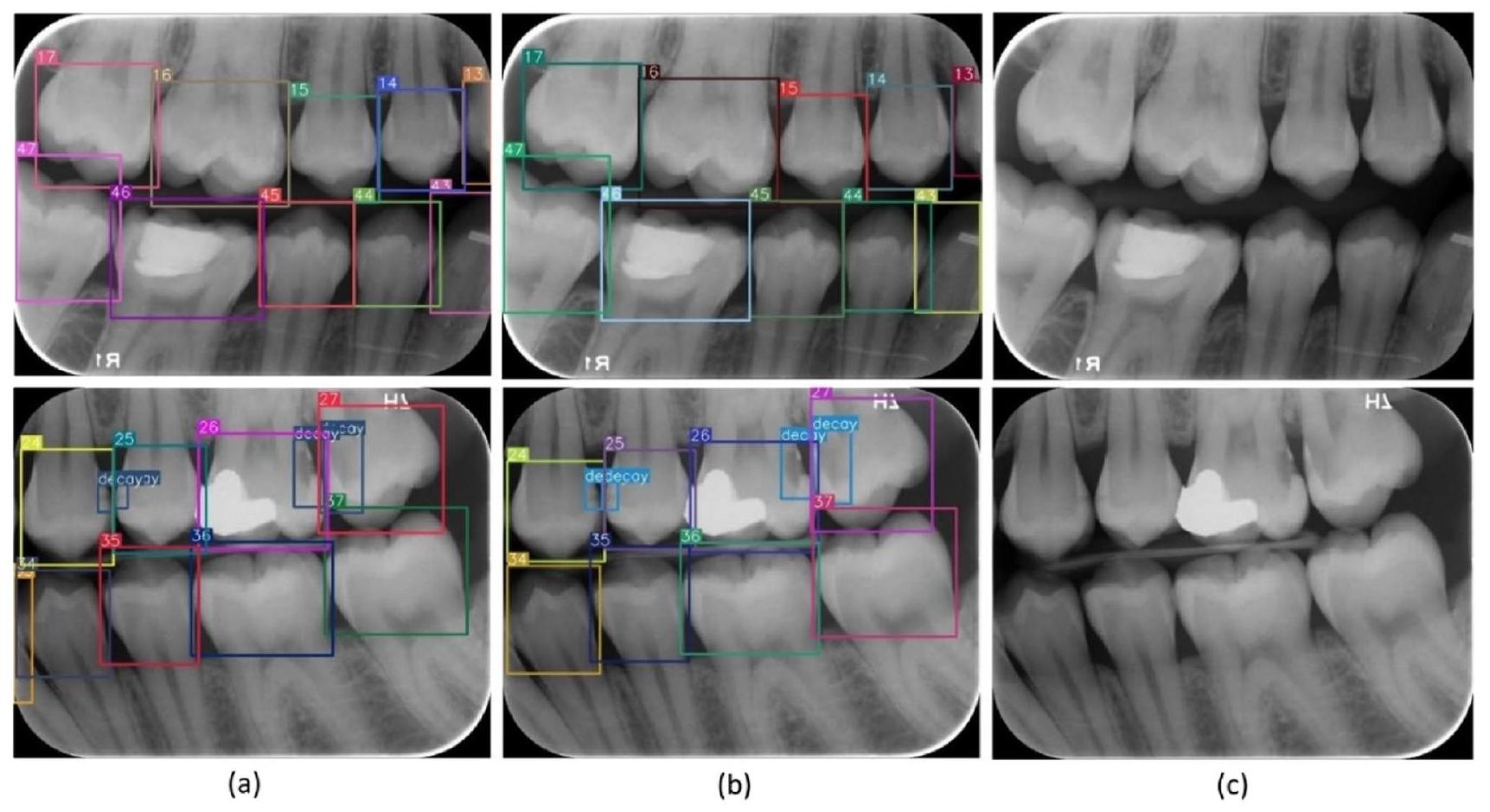

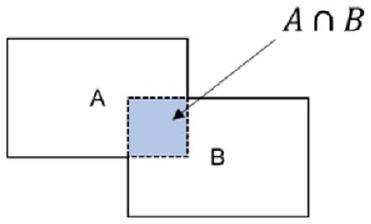

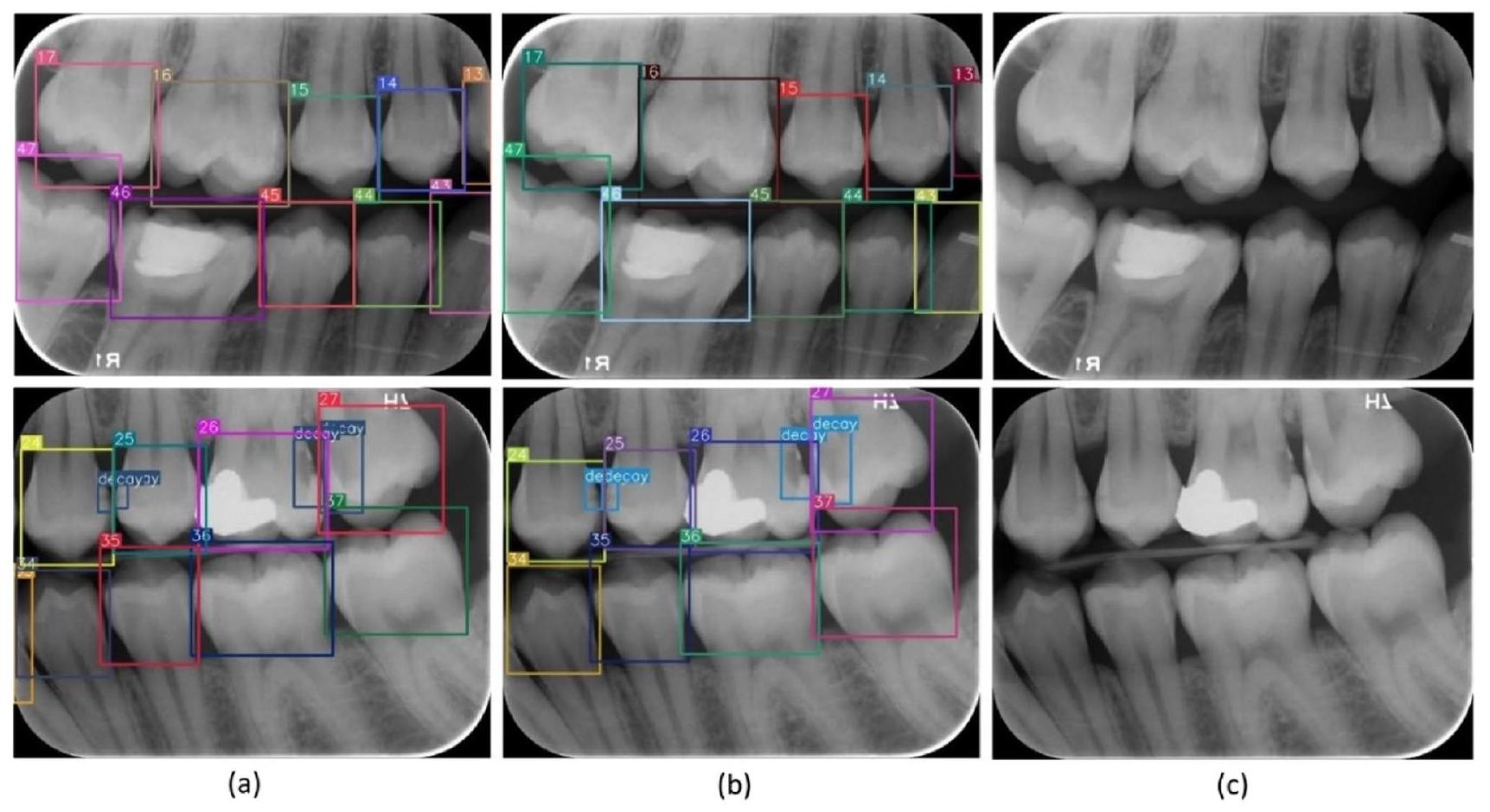

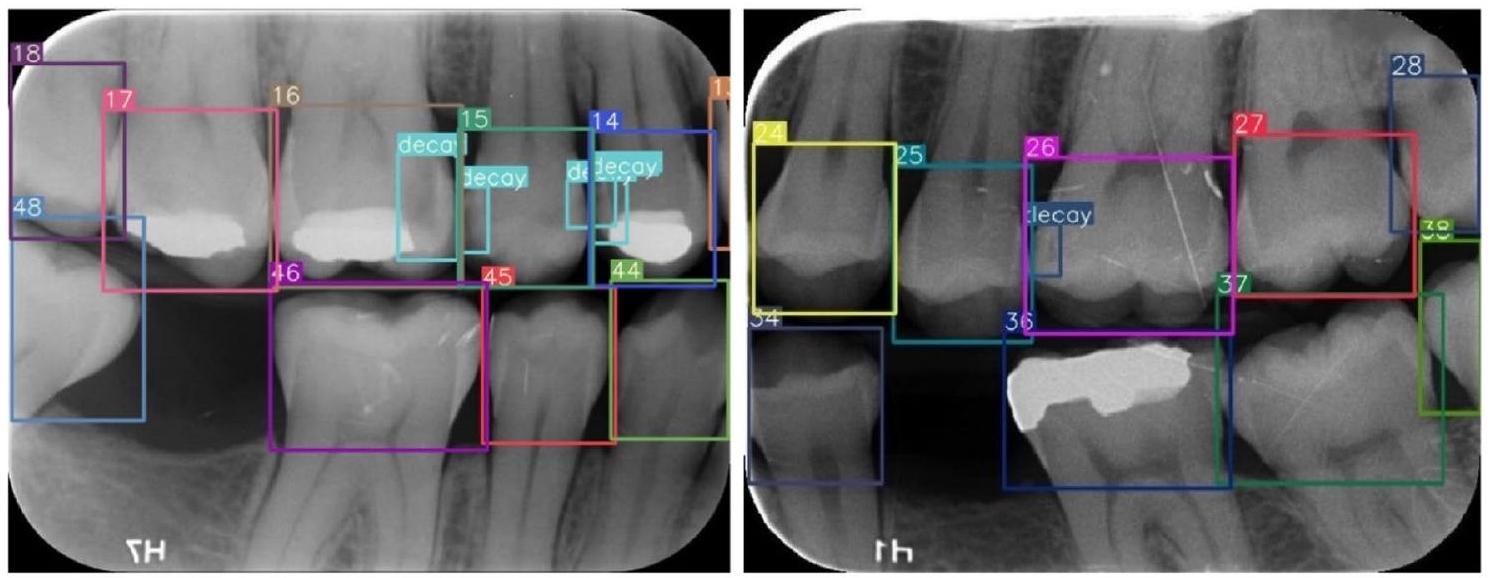

Image evaluation and data labeling

to the Fédération Dentaire Internationale (FDI) tooth notation system (16-17-18-26-27-28-36-37-38-46-47-48 (molars), 14-15-24-25-34-35-44-45 (premolars) and 13-23-33-43 (canines)). Also, caries lesions were labeled as decay in digital bitewing images. While in the data labeling procedure,

annotators reviewed the bitewing images at the same time, and labeling proceeded once they reached agreement (Fig. 3).

Detecting the bitewing image's side via convolutional neural network

with sigmoid activation function. The proposed model was trained for 30 epochs with a batch size of 32 and an initial learning rate of 0.001. Binary cross-entropy was used as the loss function and the Adam algorithm was chosen as the optimizer.
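The training setup described above can be illustrated with a minimal, dependency-free sketch. It only shows how a sigmoid output and the binary cross-entropy loss interact for a two-class (left/right) decision. The label convention (0 = left, 1 = right), the `CONFIG` dictionary and all function names are assumptions made for illustration; the actual network and training loop of the study are not reproduced here.

```python
import math

# Hyperparameters quoted from the text; the dictionary itself is illustrative.
CONFIG = {"epochs": 30, "batch_size": 32, "initial_lr": 0.001,
          "loss": "binary_cross_entropy", "optimizer": "adam"}


def sigmoid(z: float) -> float:
    """Map a raw network output (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(y_true: int, p: float, eps: float = 1e-7) -> float:
    """Loss for a single sample; eps guards against log(0)."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def predict_side(logit: float, threshold: float = 0.5) -> str:
    """Assumed convention: probability >= threshold means 'right', else 'left'."""
    return "right" if sigmoid(logit) >= threshold else "left"


if __name__ == "__main__":
    # (logit, true label) pairs; the values are made up for the demonstration.
    for logit, label in [(2.0, 1), (-1.5, 0), (0.3, 0)]:
        p = sigmoid(logit)
        print(predict_side(logit), round(binary_cross_entropy(label, p), 4))
```

A confident correct prediction yields a small loss, while the third sample (predicted "right" for a left-side label) is penalized more heavily, which is the signal the optimizer uses during training.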

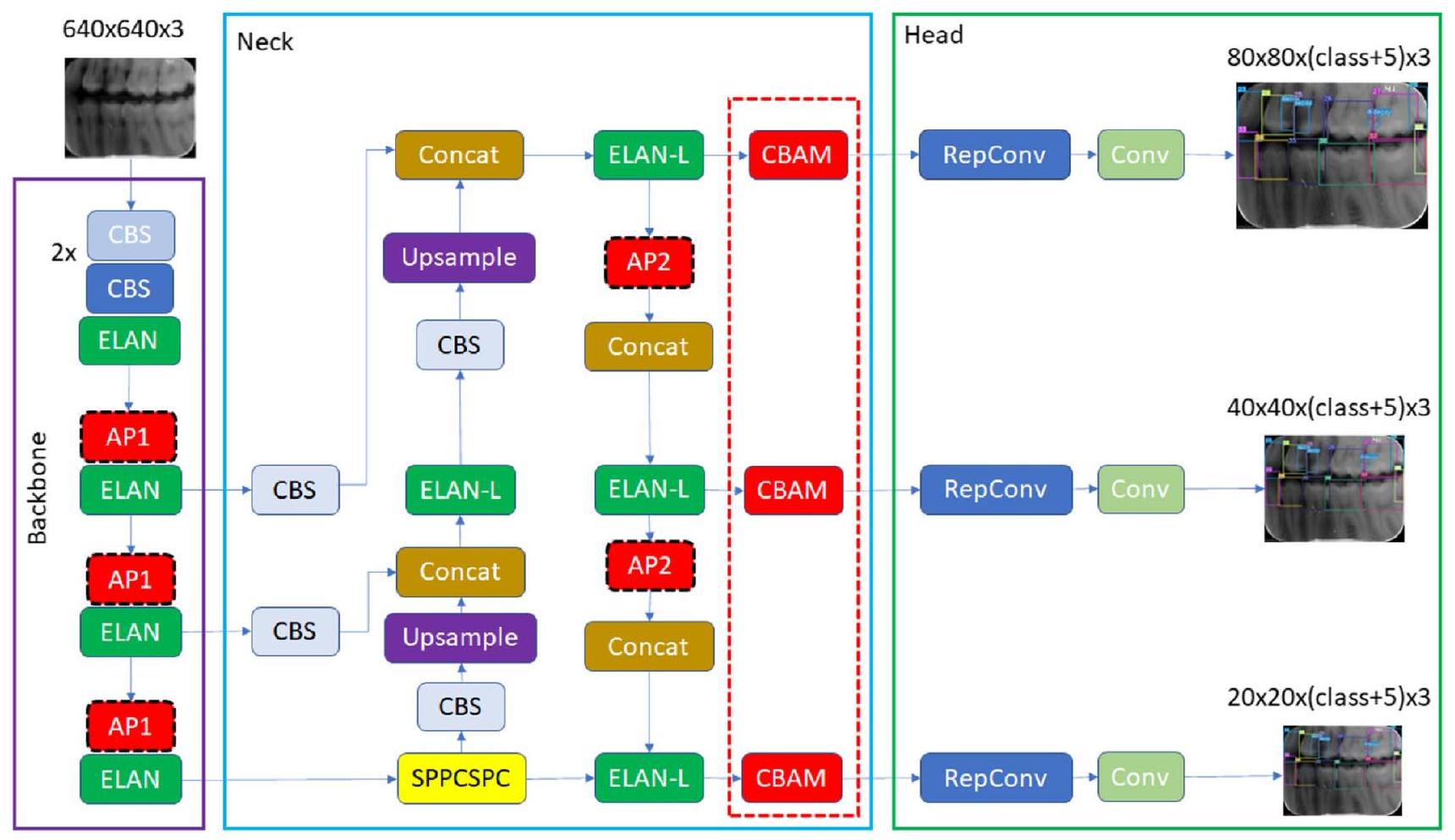

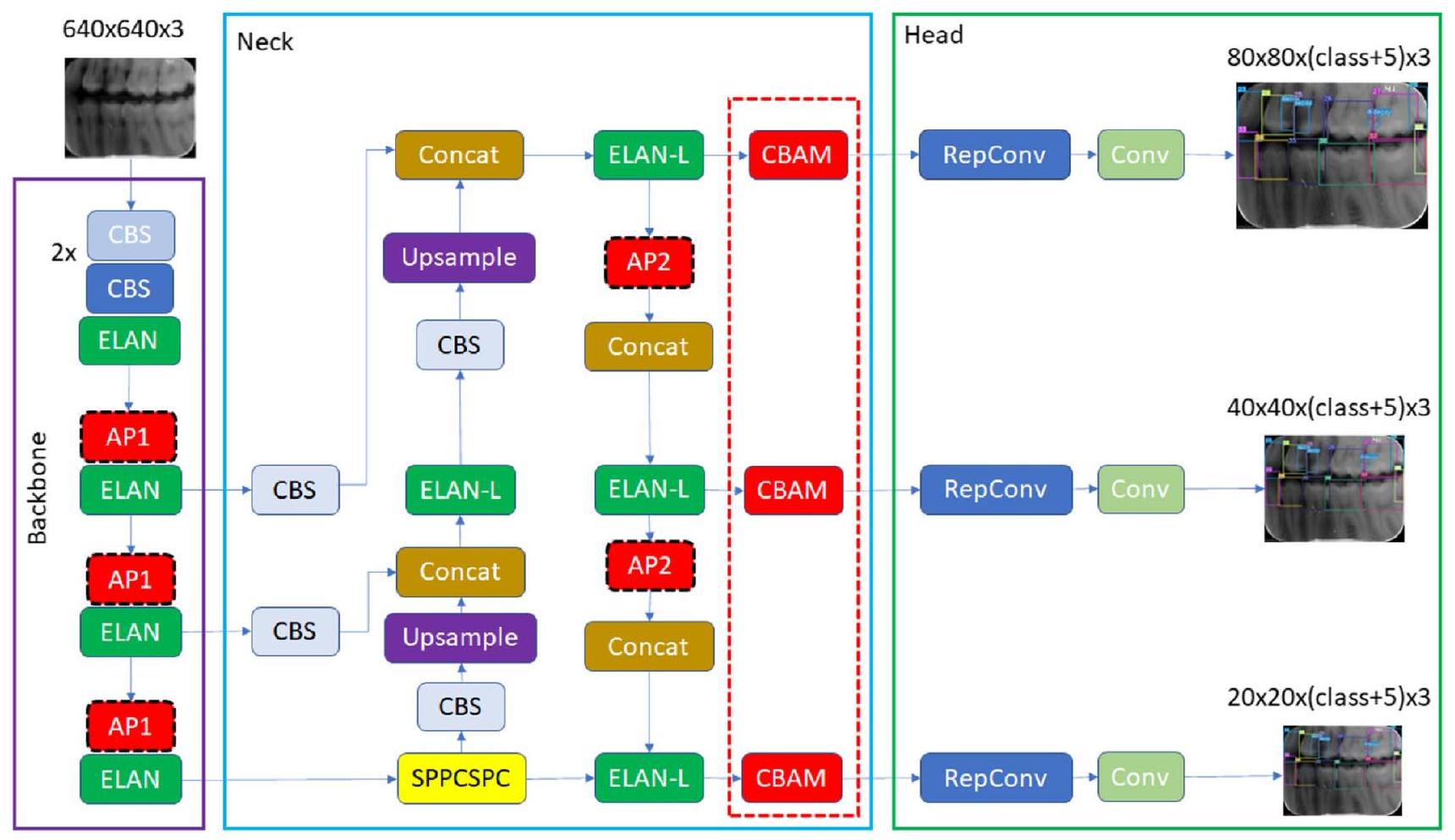

Improved YOLOv7 architecture, teeth numbering and caries detection

The architecture of original YOLOv7

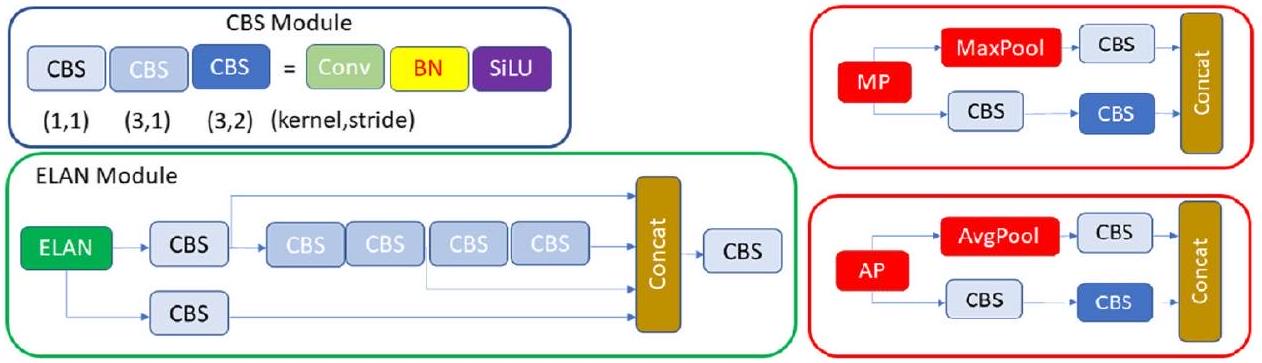

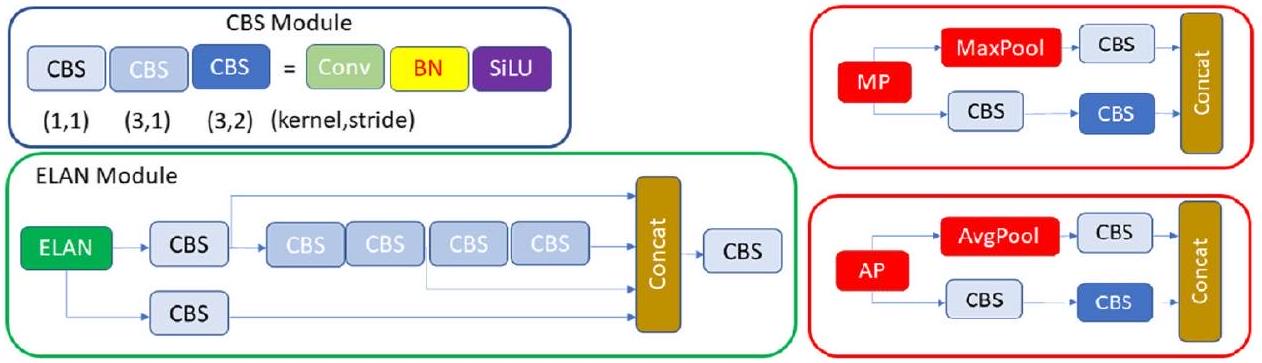

and a lower branch and consisting of CBS modules and the max pooling (MaxPool) layer (Fig. 4). In this module, the upper branch utilizes MaxPool to capture the maximum value information from small local areas, while the CBS modules in the lower branch extract all valuable information from small local areas. This dual approach enhances the network's feature extraction capability.

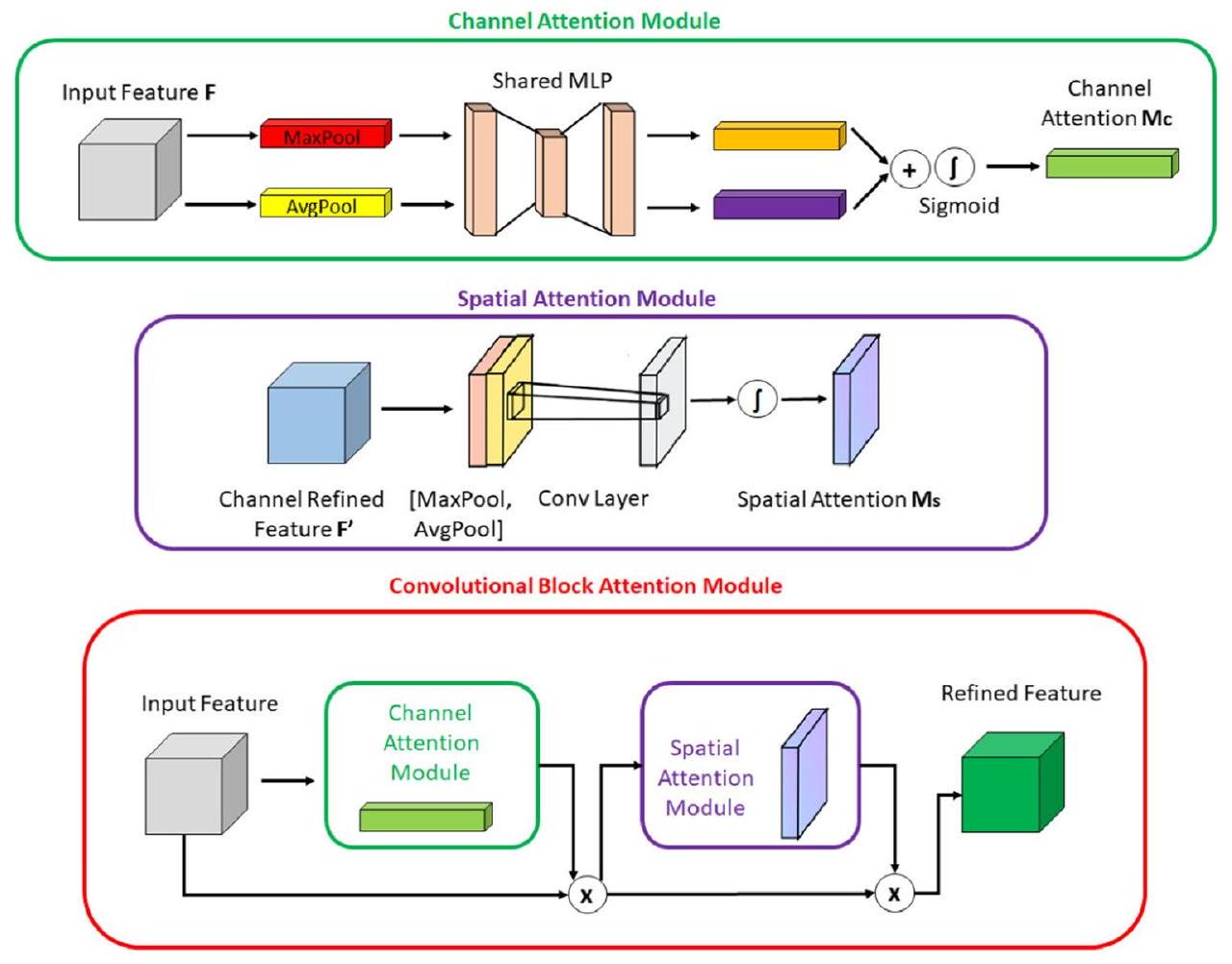

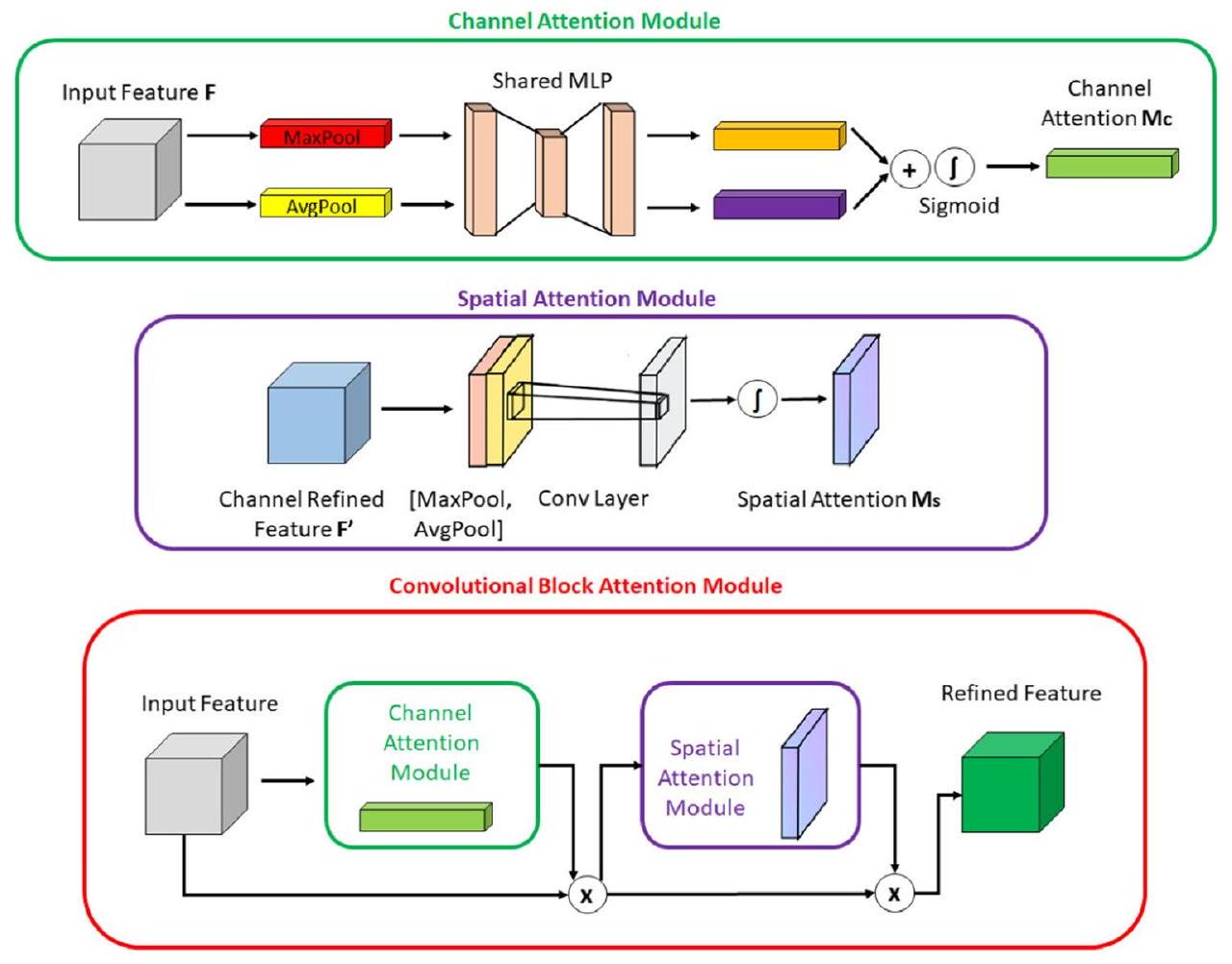

Improved YOLOv7 with convolutional block attention module

the object within the image, thereby enabling more precise channel attention. These generated context descriptors are forwarded to a shared multi-layer network. After the vectors obtained from the multi-layer network are merged, the channel attention map is generated by applying the sigmoid function. Within the CBAM module, the spatial attention module (SAM) specifically targets the localization of the most informative regions within the input image. Spatial attention computation within the SAM module involves the application of average pooling and max pooling along the channel axis of the input image. The resulting two-channel image is subjected to convolutional processing to convert it into a single-channel feature map, which is then merged and forwarded to a standard convolutional layer to create an efficient feature descriptor. The output from the convolutional layer is passed through a sigmoid activation layer. The sigmoid function, which is a probability-based activation, transforms all values to a range between 0 and 1, thereby revealing the spatial attention map. In summary, CBAM combines the two attention modules described above to determine what is important in the input image and to locate the descriptive regions within the image. The CBAM, CAM and SAM modules are illustrated in Fig. 6.
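The two attention steps described above can be sketched in plain Python on a tiny feature map. This is a simplified illustration, not the module used in the study: the MLP weights `w1` and `w2` are hypothetical, the spatial branch uses a 1x1 mixing of the two pooled maps instead of a larger convolution kernel, and applying channel attention before spatial attention is an assumption of this sketch.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, w1, w2):
    """feat: C channels, each an H x W list of lists.
    w1 (hidden x C) and w2 (C x hidden) are the shared MLP weights (hypothetical)."""
    avg_desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    max_desc = [max(map(max, ch)) for ch in feat]

    def mlp(v):
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    # Both descriptors pass through the same MLP; the outputs are summed.
    merged = [a + m for a, m in zip(mlp(avg_desc), mlp(max_desc))]
    return [sigmoid(v) for v in merged]  # one weight in (0, 1) per channel


def spatial_attention(feat, w_avg, w_max, bias=0.0):
    """Pool along the channel axis, then mix the two maps (1x1 simplification)."""
    channels, height, width = len(feat), len(feat[0]), len(feat[0][0])
    att = []
    for i in range(height):
        row = []
        for j in range(width):
            vals = [feat[c][i][j] for c in range(channels)]
            mixed = w_avg * (sum(vals) / channels) + w_max * max(vals) + bias
            row.append(sigmoid(mixed))  # one weight in (0, 1) per pixel
        att.append(row)
    return att


def cbam(feat, w1, w2, w_avg=1.0, w_max=1.0):
    """Channel attention followed by spatial attention (assumed order)."""
    ca = channel_attention(feat, w1, w2)
    refined = [[[ca[c] * v for v in row] for row in ch] for c, ch in enumerate(feat)]
    sa = spatial_attention(refined, w_avg, w_max)
    return [[[sa[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)]
            for ch in refined]


if __name__ == "__main__":
    feature_map = [[[1.0, 2.0], [3.0, 4.0]],   # channel 0
                   [[0.0, 0.0], [0.0, 8.0]]]   # channel 1
    out = cbam(feature_map, w1=[[0.5, 0.5]], w2=[[1.0], [-1.0]])
    print(out)
```

The output keeps the shape of the input, and every value is the input scaled by a channel weight and a pixel weight, both between 0 and 1.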

the average pooling method is used instead of max pooling. Max pooling returns the maximum value in each window, while average pooling returns the average value in each window. The benefit of using average pooling instead of max pooling is that it can help reduce overfitting. Max pooling is more prone to memorization because it preserves only the strongest feature in each window, which may not represent the entire window. Average pooling, on the other hand, considers all features in each window, which can help preserve more information. Additionally, average pooling can help smooth the output, which can be useful when the input is noisy or volatile. In this context, the changes we made are shown in Fig. 7. This improvement was observed to have a positive effect on detection performance.
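The difference between the two pooling operations can be made concrete with a small sketch. The 4x4 input and the helper names below are made up for illustration; non-overlapping 2x2 windows are assumed.

```python
def pool2d(x, k, reduce_fn):
    """Apply reduce_fn to each non-overlapping k x k window of a 2D list."""
    height, width = len(x), len(x[0])
    return [[reduce_fn([x[i + di][j + dj] for di in range(k) for dj in range(k)])
             for j in range(0, width - k + 1, k)]
            for i in range(0, height - k + 1, k)]


def max_pool(x, k=2):
    return pool2d(x, k, max)


def avg_pool(x, k=2):
    return pool2d(x, k, lambda window: sum(window) / len(window))


if __name__ == "__main__":
    # The single large value (9) mimics a noisy spike in an otherwise flat area.
    image = [[1, 1, 0, 0],
             [1, 9, 0, 0],
             [2, 2, 4, 4],
             [2, 2, 4, 4]]
    print(max_pool(image))  # [[9, 0], [2, 4]]
    print(avg_pool(image))  # [[3.0, 0.0], [2.0, 4.0]]
```

In the top-left window the spike fully determines the max-pooled value, whereas average pooling dampens it by blending in the three neighbouring pixels. This is the smoothing effect the paragraph refers to.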

Evaluation of teeth numbering and caries detection matching
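The abstract describes this stage as comparing the numbered teeth with the detected caries using the intersection over union (IoU) value. A minimal sketch of such a matching step follows. The box format `(x1, y1, x2, y2)`, the `min_iou` threshold, the rule of assigning each lesion to the tooth with the highest IoU, and the example coordinates are all assumptions for illustration, not details taken from the study.

```python
def box_area(box):
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(0.0, inter_w) * max(0.0, inter_h)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def match_caries_to_teeth(teeth, caries, min_iou=0.01):
    """teeth: {FDI number: box}; caries: list of boxes.
    Each lesion goes to the tooth with the highest IoU above min_iou (hypothetical rule)."""
    matches = {}
    for lesion in caries:
        best_number, best_iou = None, min_iou
        for number, tooth_box in teeth.items():
            score = iou(tooth_box, lesion)
            if score > best_iou:
                best_number, best_iou = number, score
        if best_number is not None:
            matches.setdefault(best_number, []).append(lesion)
    return matches


if __name__ == "__main__":
    teeth = {16: (0, 0, 100, 100), 15: (100, 0, 180, 100)}
    caries = [(80, 40, 100, 60), (150, 10, 170, 30), (300, 300, 320, 320)]
    print(match_caries_to_teeth(teeth, caries))
```

Because a lesion box is much smaller than a tooth box, the IoU values are small even for a lesion lying fully inside a tooth, so the threshold in this sketch is deliberately low. The third lesion overlaps no tooth and is left unmatched.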

Experimental setup

Performance evaluation analysis

Adaptation of the confusion matrix for evaluating performance of teeth numbering and caries detection matching

TP: the output in which the model correctly predicts the positive class (teeth correctly detected, correctly numbered, caries correctly detected on bitewing radiographs)

FP: the output in which the model incorrectly predicts the positive class (teeth correctly detected, correctly numbered, but caries prediction on sound surface; teeth correctly detected, but incorrectly numbered regardless of caries)

FN: the output in which the model incorrectly predicts the negative class (teeth correctly detected, correctly numbered, but no caries prediction on decayed surface)

TN: the output in which the model correctly predicts the negative class (teeth correctly detected, correctly numbered, no caries prediction when there was no caries)
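The definitions above feed the usual confusion-matrix metrics. As a minimal sketch, the function below computes them from the four counts using the standard formulas. The function name and dictionary keys are illustrative, and the example counts are the overall (both sides) values reported in the matching results table of this article.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard metrics derived from confusion-matrix counts."""
    recall = tp / (tp + fn)          # also called sensitivity
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "recall": recall, "specificity": specificity,
            "precision": precision, "f1": f1}


if __name__ == "__main__":
    # Overall counts from the results table: TP=500, FP=78, FN=113, TN=2151.
    for name, value in confusion_metrics(500, 78, 113, 2151).items():
        print(f"{name}: {value:.4f}")
```

With these counts the computed values agree with the reported accuracy, recall, specificity, precision and F1-score to within one unit in the third decimal place.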

Results

Ablation experiments

| | Recall | Precision | F1-score |
| --- | --- | --- | --- |
| Left side | 0.995 | 0.987 | 0.99 |
| Right side | 0.993 | 0.988 | 0.99 |
| All | 0.994 | 0.987 | 0.99 |

| | Recall | Precision | F1-score |
| --- | --- | --- | --- |
| Teeth numbering, left side | 0.976 | 0.978 | 0.977 |
| Teeth numbering, right side | 0.972 | 0.993 | 0.982 |
| Caries detection, left side | 0.857 | 0.833 | 0.844 |
| Caries detection, right side | 0.810 | 0.900 | 0.800 |
| Numbering and caries detection, left side | 0.966 | 0.966 | 0.966 |
| Numbering and caries detection, right side | 0.959 | 0.985 | 0.968 |
| Numbering and caries detection, left and right sides (all) | 0.962 | 0.975 | 0.967 |
| Teeth numbering (all) | 0.974 | 0.985 | 0.979 |
| Caries detection (all) | 0.833 | 0.866 | 0.849 |

| | TP | FP | FN | TN | Accuracy | Recall | Specificity | Precision | F1-score |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Left side | 240 | 48 | 47 | 1102 | 0.933 | 0.836 | 0.958 | 0.833 | 0.834 |
| Right side | 260 | 30 | 66 | 1049 | 0.931 | 0.797 | 0.972 | 0.896 | 0.843 |
| All | 500 | 78 | 113 | 2151 | 0.932 | 0.815 | 0.965 | 0.865 | 0.839 |

parameters, 103.8 GFLOPS and a size of 75.2 MB in memory. A satisfactory performance gain was achieved with only a small increase in model complexity.

| Model | Class | Recall | Precision | F1-score |
| --- | --- | --- | --- | --- |
| Original YOLOv7-MP without right/left split | Decay, all | 0.676 | 0.707 | 0.691 |
| | Numbering, all | 0.409 | 0.236 | 0.299 |
| Original YOLOv7-MP with right/left split | Decay, right side | 0.659 | 0.803 | |
| | Decay, left side | 0.696 | 0.848 | |
| | Decay, all | 0.677 | 0.825 | 0.743 |
| | Numbering, right side | 0.971 | 0.974 | |
| | Numbering, left side | 0.979 | 0.978 | |
| | Numbering, all | 0.975 | 0.976 | 0.975 |
| YOLOv7-AP | Decay, right side | 0.626 | 0.773 | |
| | Decay, left side | 0.797 | 0.775 | |
| | Decay, all | 0.711 | 0.774 | 0.741 |
| | Numbering, right side | 0.967 | 0.977 | |
| | Numbering, left side | 0.981 | 0.983 | |
| | Numbering, all | 0.974 | 0.980 | 0.976 |
| YOLOv7-MP-CBAM | Decay, right side | 0.579 | 0.838 | |
| | Decay, left side | 0.754 | 0.832 | |
| | Decay, all | 0.666 | 0.836 | 0.741 |
| | Numbering, right side | 0.974 | 0.983 | |
| | Numbering, left side | 0.981 | 0.978 | |
| | Numbering, all | 0.977 | 0.980 | 0.978 |
| YOLOv7-AP-CBAM (ours) | Decay, right side | 0.810 | 0.900 | |
| | Decay, left side | 0.857 | 0.833 | |
| | Decay, all | 0.833 | 0.866 | 0.849 |
| | Numbering, right side | 0.972 | 0.993 | |
| | Numbering, left side | 0.976 | 0.978 | |
| | Numbering, all | 0.974 | 0.985 | 0.979 |

Discussion

for their outstanding potential in solving problems. In this context, many deep learning architectures have been developed, with CNNs standing out in particular for their impressive performance in image recognition tasks. By using CNN models, practitioners can enhance work efficiency and achieve accurate results in the diagnostic process.

on the left side in the training set. To avoid this problem, new bitewing images that include caries can be added to the right-side training set. Dataset bias is another problem, which occurs when certain samples in the dataset are over-represented or under-represented. To avoid this problem, the dataset was generated using images taken from the same devices in different clinics with different resolutions and exposure times. However, images taken with different X-ray machines and from people with different demographic characteristics may affect the results. The parameters of the proposed model were determined using validation data during the training phase, and the model's performance on unseen data was evaluated using test data different from the training and validation data. CNN models have high hardware requirements, especially in the training phase. In our study, we performed training and testing on a 1080 Ti graphics card. Computational performance would be higher on more advanced graphics cards. Caries lesions were not evaluated with the gold-standard method of histological assessment; they were identified and labeled by the annotators on radiographs. The model faced challenges in accurately predicting teeth located at the corners of the bitewing image, unlike other teeth. This may be related to the lower visibility of these particular teeth and the limited amount of data in the training set. Also, the improved YOLOv7-AP-CBAM model was not compared with an expert.

Despite the limitations of the study, it is worth noting that there are also several important strengths. The present study provided a comprehensive analysis by integrating the results of tooth detection, numbering, and caries detection. This holistic approach facilitated a more coherent understanding of the results. In addition, the experimental design included dividing the data into right and left sides, which enabled a more detailed analysis. This approach improved the performance metrics of our results. Furthermore, by adopting a multidisciplinary approach, our study offered a new perspective and makes an innovative contribution to previous papers.

Conclusion

revealed that CNNs can provide valuable support to clinicians by automating the detection and numbering of teeth, as well as the detection of caries in bitewing radiographs. This can contribute to assessment, improve overall performance, and ultimately save valuable time.

E.A.: Conceptualization, Software, Visualization, Data curation, Formal analysis, Writing – original draft, Writing – review and editing.

Y.B.: Conceptualization, Methodology, Data collection and labeling, Writing – review and editing.

Declarations

References

- Featherstone JDB (2000) The science and practice of caries prevention. J Am Dent Assoc 131:887-899. https://doi.org/10.14219/jada.archive.2000.0307

- Mortensen D, Dannemand K, Twetman S, Keller MK (2014) Detection of non-cavitated occlusal caries with impedance spectroscopy and laser fluorescence: an in vitro study. Open Dent J 8:28-32. https://doi.org/10.2174/1874210601408010028

- Pitts NB (2004) Are we ready to move from operative to nonoperative/preventive treatment of dental caries in clinical practice? Caries Res 38:294-304. https://doi.org/10.1159/000077769

- Baelum V, Heidmann J, Nyvad B (2006) Dental caries paradigms in diagnosis and diagnostic research. Eur J Oral Sci 114:263-277. https://doi.org/10.1111/j.1600-0722.2006.00383.x

- Pitts NB, Stamm JW (2004) International consensus workshop on caries clinical trials (ICW-CCT)-final consensus statements: agreeing where the evidence leads. J Dent Res 83:125-128. https://doi.org/10.1177/154405910408301s27

- Selwitz RH, Ismail AI, Pitts NB (2007) Dental caries. The Lancet 369:51-59. https://doi.org/10.1016/S0140-6736(07)60031-2

- Chan M, Dadul T, Langlais R, Russell D, Ahmad M (2018) Accuracy of extraoral bite-wing radiography in detecting proximal caries and crestal bone loss. J Am Dent Assoc 149:51-58. https://doi.org/10.1016/j.adaj.2017.08.032

- Vandenberghe B, Jacobs R, Bosmans H (2010) Modern dental imaging: a review of the current technology and clinical applications in dental practice. Eur Radiol 20:2637-2655. https://doi.org/10.1007/s00330-010-1836-1

- Baelum V (2010) What is an appropriate caries diagnosis? Acta Odontol Scand 68:65-79. https://doi.org/10.3109/00016350903530786

- Kamburoğlu K, Kolsuz E, Murat S, Yüksel S, Özen T (2012) Proximal caries detection accuracy using intraoral bitewing radiography, extraoral bitewing radiography and panoramic radiography. Dentomaxillofacial Radiol 41:450-459. https://doi.org/10.1259/dmfr/30526171

- Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM (2020) The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofacial Radiol 49:20190107. https://doi.org/10.1259/dmfr.20190107

- Suzuki K (2017) Overview of deep learning in medical imaging. Radiol Phys Technol 10:257-273. https://doi.org/10.1007/s12194-017-0406-5

- Rodrigues JA, Krois J, Schwendicke F (2021) Demystifying artificial intelligence and deep learning in dentistry. Braz Oral Res 35. https://doi.org/10.1590/1807-3107bor-2021.vol35.0094

- Barr A, Feigenbaum EA, Cohen PR (1981) The handbook of artificial intelligence. In: Artificial Intelligence, William Kaufman Inc, California, pp 3-11

- Lee J-G, Jun S, Cho Y-W, Lee H, Kim GB, Seo JB, Kim N (2017) Deep learning in medical imaging: general overview. Korean J Radiol 18:570. https://doi.org/10.3348/kjr.2017.18.4.570

- LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436-444. https://doi.org/10.1038/nature14539

- Hubel DH, Wiesel TN (1968) Receptive fields and functional architecture of monkey striate cortex. J Physiol 195:215-243. https://doi.org/10.1113/jphysiol.1968.sp008455

- Schwendicke F, Samek W, Krois J (2020) Artificial intelligence in dentistry: chances and challenges. J Dent Res 99:769-774. https://doi.org/10.1177/0022034520915714

- Bayraktar Y, Ayan E (2022) Diagnosis of interproximal caries lesions with deep convolutional neural network in digital bitewing radiographs. Clin Oral Investig 26:623-632. https://doi.org/10.1007/s00784-021-04040-1

- Cantu AG, Gehrung S, Krois J, Chaurasia A, Rossi JG, Gaudin R, Elhennawy K, Schwendicke F (2020) Detecting caries lesions of different radiographic extension on bitewings using deep learning. J Dent 100:103425. https://doi.org/10.1016/j.jdent.2020.103425

- Choi J, Eun H, Kim C (2018) Boosting proximal dental caries detection via combination of variational methods and convolutional neural network. J Signal Process Syst 90:87-97. https://doi.org/10.1007/s11265-016-1214-6

- Lee J-H, Kim D-H, Jeong S-N, Choi S-H (2018) Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent 77:106-111. https://doi.org/10.1016/j.jdent.2018.07.015

- Moutselos K, Berdouses E, Oulis C, Maglogiannis I (2019) Recognizing occlusal caries in dental intraoral images using deep learning. In: 2019 41st Annu Int Conf IEEE Eng Med Biol Soc EMBC, IEEE, pp. 1617-1620. https://doi.org/10.1109/EMBC.2019.8856553

- Prajapati SA, Nagaraj R, Mitra S (2017) Classification of dental diseases using CNN and transfer learning. In: 2017 5th Int Symp Comput Bus Intell ISCBI, IEEE, pp. 70-74. https://doi.org/10.1109/ISCBI.2017.8053547

- Vidnes-Kopperud S, Tveit AB, Espelid I (2011) Changes in the treatment concept for approximal caries from 1983 to 2009 in Norway. Caries Res 45:113-120. https://doi.org/10.1159/000324810

- Moran M, Faria M, Giraldi G, Bastos L, Oliveira L, Conci A (2021) Classification of approximal caries in bitewing radiographs using convolutional neural networks. Sensors 21:5192. https://doi.org/10.3390/s21155192

- Srivastava MM, Kumar P, Pradhan L, Varadarajan S (2017) Detection of tooth caries in bitewing radiographs using deep learning. http://arxiv.org/abs/1711.07312. Accessed 7 July 2023

- Leite AF, Gerven AV, Willems H, Beznik T, Lahoud P, Gaêta-Araujo H, Vranckx M, Jacobs R (2021) Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin Oral Investig 25:2257-2267. https://doi.org/10.1007/s00784-020-03544-6

- Yasa Y, Çelik Ö, Bayrakdar IS, Pekince A, Orhan K, Akarsu S, Atasoy S, Bilgir E, Odabaş A, Aslan AF (2021) An artificial intelligence proposal to automatic teeth detection and numbering in dental bite-wing radiographs. Acta Odontol Scand 79:275-281. https://doi.org/10.1080/00016357.2020.1840624

- Bilgir E, Bayrakdar İŞ, Çelik Ö, Orhan K, Akkoca F, Sağlam H, Odabaş A, Aslan AF, Ozcetin C, Kıllı M, Rozylo-Kalinowska I (2021) An artificial intelligence approach to automatic tooth detection and numbering in panoramic radiographs. BMC Med Imaging 21:124. https://doi.org/10.1186/s12880-021-00656-7

- Kaya E, Gunec HG, Gokyay SS, Kutal S, Gulum S, Ates HF (2022) Proposing a CNN method for primary and permanent tooth detection and enumeration on pediatric dental radiographs. J Clin Pediatr Dent 46:293. https://doi.org/10.22514/1053-4625-46.4.6

- Kılıc MC, Bayrakdar IS, Çelik Ö, Bilgir E, Orhan K, Aydın OB, Kaplan FA, Sağlam H, Odabaş A, Aslan AF, Yılmaz AB (2021) Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofacial Radiol 50:20200172. https://doi.org/10.1259/dmfr.20200172

- Tekin BY, Ozcan C, Pekince A, Yasa Y (2022) An enhanced tooth segmentation and numbering according to FDI notation in bitewing radiographs. Comput Biol Med 146:105547. https://doi.org/10.1016/j.compbiomed.2022.105547

- Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, Sveshnikov MM, Bednenko GB (2019) Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofacial Radiol 48:20180051. https://doi.org/10.1259/dmfr.20180051

- Topol EJ (2019) High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25:44-56. https://doi.org/10.1038/s41591-018-0300-7

- Masood M, Masood Y, Newton JT (2015) The clustering effects of surfaces within the tooth and teeth within individuals. J Dent Res 94:281-288. https://doi.org/10.1177/0022034514559408

- Chen X, Guo J, Ye J, Zhang M, Liang Y (2022) Detection of proximal caries lesions on bitewing radiographs using deep learning method. Caries Res 56:455-463. https://doi.org/10.1159/000527418

- Huang G, Liu Z, van der Maaten L, Weinberger KQ (2016) Densely connected convolutional networks. https://doi.org/10.48550/ARXIV.1608.06993

- Girshick R (2015) Fast R-CNN. In: 2015 IEEE Int Conf Comput Vis ICCV, IEEE, Santiago, Chile, pp. 1440-1448. https://doi.org/10.1109/ICCV.2015.169

- Ren S, He K, Girshick R, Sun J (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 39:1137-1149. https://doi.org/10.1109/TPAMI.2016.2577031

- He K, Gkioxari G, Dollar P, Girshick R (2017) Mask R-CNN. In: 2017 IEEE Int Conf Comput Vis ICCV, IEEE, Venice, pp. 2980-2988. https://doi.org/10.1109/ICCV.2017.322

- Terven J, Cordova-Esparza D (2023) A comprehensive review of YOLO: from YOLOv1 and beyond. http://arxiv.org/abs/2304.00501. Accessed 7 July 2023

- Wang C-Y, Bochkovskiy A, Liao H-YM (2022) YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. https://doi.org/10.48550/ARXIV.2207.02696

- Ding X, Zhang X, Ma N, Han J, Ding G, Sun J (2021) RepVGG: making VGG-style ConvNets great again. In: 2021 IEEE/CVF Conf Comput Vis Pattern Recognit CVPR, IEEE, Nashville, TN, USA, pp. 13728-13737. https://doi.org/10.1109/CVPR46437.2021.01352

- Larochelle H, Hinton G (2010) Learning to combine foveal glimpses with a third-order Boltzmann machine. Adv Neural Inform Process Syst 23:1243-1251

- Woo S, Park J, Lee J-Y, Kweon IS (2018) CBAM: convolutional block attention module. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (eds) Comput Vis-ECCV 2018, Springer International Publishing, Cham, pp. 3-19. https://doi.org/10.1007/978-3-030-01234-2_1

- Shi J, Yang J, Zhang Y (2022) Research on steel surface defect detection based on YOLOv5 with attention mechanism. Electronics 11:3735. https://doi.org/10.3390/electronics11223735

- Xue Z, Xu R, Bai D, Lin H (2023) YOLO-tea: a tea disease detection model improved by YOLOv5. Forests 14:415. https://doi.org/10.3390/f14020415

- De Moraes JL, De Oliveira Neto J, Badue C, Oliveira-Santos T, De Souza AF (2023) Yolo-papaya: a papaya fruit disease detector and classifier using CNNs and convolutional block attention modules. Electronics 12:2202. https://doi.org/10.3390/electronics12102202

- Yan J, Zhou Z, Zhou D, Su B, Xuanyuan Z, Tang J, Lai Y, Chen J, Liang W (2022) Underwater object detection algorithm based on attention mechanism and cross-stage partial fast spatial pyramidal pooling. Front Mar Sci 9:1056300. https://doi.org/10.3389/fmars.2022.1056300

- Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A, Kopf A, Yang E, DeVito Z, Raison M, Tejani A, Chilamkurthy S, Steiner B, Fang L, Bai J, Chintala S (2019) PyTorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst 32:8024-8035

- Chollet F et al (2015) Keras. https://github.com/fchollet/keras. Accessed 7 July 2023

- Bradski G (2000) The OpenCV library. Dr. Dobb’s Journal: Software Tools for the Professional Programmer 25:120-123

- Zhang Y, Li J, Fu W, Ma J, Wang G (2023) A lightweight YOLOv7 insulator defect detection algorithm based on DSC-SE. PLoS One 18:e0289162. https://doi.org/10.1371/journal.pone.0289162

- Gomez J (2015) Detection and diagnosis of the early caries lesion. BMC Oral Health 15:S3. https://doi.org/10.1186/1472-6831-15-S1-S3

- Yasaka K, Akai H, Kunimatsu A, Kiryu S, Abe O (2018) Deep learning with convolutional neural network in radiology. Jpn J Radiol 36:257-272. https://doi.org/10.1007/s11604-018-0726-3

- Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, Lee C-H (2019) A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep 9:3840. https://doi.org/10.1038/s41598-019-40414-y

- Casalegno F, Newton T, Daher R, Abdelaziz M, Lodi-Rizzini A, Schürmann F, Krejci I, Markram H (2019) Caries detection with near-infrared transillumination using deep learning. J Dent Res 98:1227-1233. https://doi.org/10.1177/0022034519871884

- Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, Nakata K, Katsumata A, Fujita H, Ariji E (2020) Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol 36:337-343. https://doi.org/10.1007/s11282-019-00409-x

- Lee J-H, Kim D, Jeong S-N, Choi S-H (2018) Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci 48:114. https://doi.org/10.5051/jpis.2018.48.2.114

- Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, Kise Y, Nozawa M, Katsumata A, Fujita H, Ariji E (2019) Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol 35:301-307. https://doi.org/10.1007/s11282-018-0363-7

- Park J-H, Hwang H-W, Moon J-H, Yu Y, Kim H, Her S-B, Srinivasan G, Aljanabi MNA, Donatelli RE, Lee S-J (2019) Automated identification of cephalometric landmarks: Part 1-Comparisons between the latest deep-learning methods YOLOV3 and SSD. Angle Orthod 89:903-909. https://doi.org/10.2319/022019-127.1

- Pauwels R, Brasil DM, Yamasaki MC, Jacobs R, Bosmans H, Freitas DQ, Haiter-Neto F (2021) Artificial intelligence for detection of periapical lesions on intraoral radiographs: comparison between convolutional neural networks and human observers. Oral Surg Oral Med Oral Pathol Oral Radiol 131:610-616. https://doi.org/10.1016/j.oooo.2021.01.018

- Rockenbach MI, Veeck EB, da Costa NP (2008) Detection of proximal caries in conventional and digital radiographs: an in vitro study. Stomatologija 10:115-120

- Abdinian M, Razavi SM, Faghihian R, Samety AA, Faghihian E (2015) Accuracy of digital bitewing radiography versus different views of digital panoramic radiography for detection of proximal caries. J Dent Tehran Iran 12:290-297

- Hintze H (1993) Screening with conventional and digital bitewing radiography compared to clinical examination alone for caries detection in low-risk children. Caries Res 27:499-504. https://doi.org/10.1159/000261588

- Peker İ, Toraman Alkurt M, Altunkaynak B (2007) Film tomography compared with film and digital bitewing radiography for proximal caries detection. Dentomaxillofacial Radiol 36:495-499. https://doi.org/10.1259/dmfr/13319800

- Schaefer G, Pitchika V, Litzenburger F, Hickel R, Kühnisch J (2018) Evaluation of occlusal caries detection and assessment by visual inspection, digital bitewing radiography and near-infrared light transillumination. Clin Oral Investig 22:2431-2438. https://doi.org/10.1007/s00784-018-2512-0

- Wenzel A (2004) Bitewing and digital bitewing radiography for detection of caries lesions. J Dent Res 83:72-75. https://doi.org/10.1177/154405910408301s14

- Mahoor MH, Abdel-Mottaleb M (2005) Classification and numbering of teeth in dental bitewing images. Pattern Recognit 38:577-586. https://doi.org/10.1016/j.patcog.2004.08.012

- Reed BE, Polson AM (1984) Relationships between bitewing and periapical radiographs in assessing crestal alveolar bone levels. J Periodontol 55:22-27. https://doi.org/10.1902/jop.1984.55.1.22

- Shaheen E, Leite A, Alqahtani KA, Smolders A, Van Gerven A, Willems H, Jacobs R (2021) A novel deep learning system for multi-class tooth segmentation and classification on cone beam computed tomography. A validation study. J Dent 115:103865. https://doi.org/10.1016/j.jdent.2021.103865

- Zhang K, Wu J, Chen H, Lyu P (2018) An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph 68:61-70. https://doi.org/10.1016/j.compmedimag.2018.07.001

- Mao Y-C, Chen T-Y, Chou H-S, Lin S-Y, Liu S-Y, Chen Y-A, Liu Y-L, Chen C-A, Huang Y-C, Chen S-L, Li C-W, Abu PAR, Chiang W-Y (2021) Caries and restoration detection using bitewing film based on transfer learning with CNNs. Sensors 21:4613. https://doi.org/10.3390/s21134613

- Koppanyi Z, Iwaszczuk D, Zha B, Saul CJ, Toth CK, Yilmaz A (2019) Multimodal semantic segmentation: fusion of RGB and depth data in convolutional neural networks. In: Multimodal Scene Understanding, Elsevier, pp. 41-64. https://doi.org/10.1016/B978-0-12-817358-9.00009-3

- Subka S, Rodd H, Nugent Z, Deery C (2019) In vivo validity of proximal caries detection in primary teeth, with histological validation. Int J Paediatr Dent 29:429-438. https://doi.org/10.1111/ipd.12478

Baturalp Ayhan

baturalpayhan@kku.edu.tr

Enes Ayan

enesayan@kku.edu.tr

Yusuf Bayraktar

yusufbayraktar@kku.edu.tr


A novel deep learning-based perspective for tooth numbering and caries detection

© The Author(s) 2024

Abstract

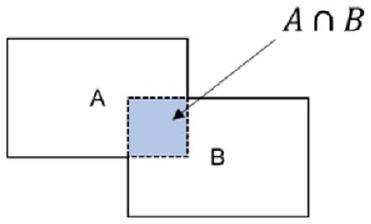

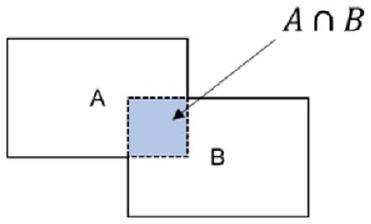

Objectives The aim of this study was to automatically detect and number teeth in digital bitewing radiographs obtained from patients, and to evaluate the diagnostic efficiency for decayed teeth in real time, using deep learning algorithms. Methods The dataset consisted of 1170 anonymized digital bitewing radiographs randomly obtained from faculty archives. After the image evaluation and labeling process, the dataset was split into training and test datasets. This study proposed an end-to-end pipeline architecture consisting of three stages for matching tooth numbers and caries lesions to enhance treatment outcomes and prevent potential issues. Initially, a pre-trained convolutional neural network (CNN) was utilized to determine the side of the bitewing images. Then, an improved CNN model, YOLOv7, was proposed for tooth numbering and caries detection. In the final stage, our developed algorithm assessed which teeth have caries by comparing the numbered teeth with the detected caries, using the intersection over union value for the matching process. Results According to test results, the recall, precision, and F1-score values were

Introduction

method, which is insufficient for the early detection of approximal caries, is one of the most basic of them [5, 6]. In addition to the visual-tactile examination method, radiological examination, which is commonly employed in intraoral imaging, plays a crucial role in dental practice. In this regard, panoramic, periapical and bitewing radiography are regularly utilized in practice. Visualization and evaluation of dental status on radiographs is one of the most important steps in disease diagnosis [7, 8]. Today, with the help of digital bitewing radiographs, which have high recall and specificity in the detection of approximal caries, caries lesions are detected easily, cheaply and successfully compared to many other methods [9, 10].

practice of the physician [35]. To the best of our knowledge, no study has investigated teeth detection, numbering, and caries detection at the same time in digital bitewing radiographs utilizing a CNN approach based on deep learning (DL). The proposed method makes this study unique compared to the existing literature.

Material and methods

Sample size determination

Image dataset

| | Train | Test |
| --- | --- | --- |
| Decay | 2289 | 602 |
| Numbering | 9344 | 1679 |
| Total surfaces | 16,674 | 2842 |
| Lesion prevalence | 24% | 21.16% |
| Total images | 1000 | 170 |

Study design

Image evaluation and data labeling

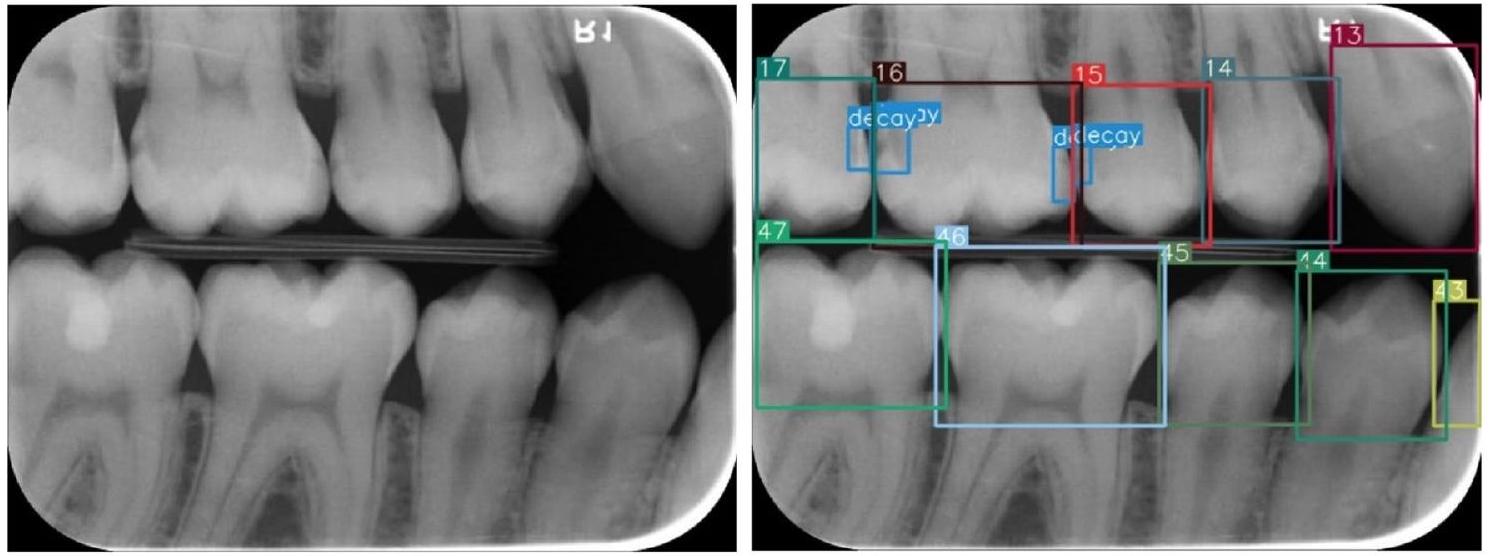

to the Fédération Dentaire Internationale (FDI) tooth notation system (16-17-18-26-27-28-36-37-38-46-47-48 (molars), 14-15-24-25-34-35-44-45 (premolars) and 13-23-33-43 (canines)). Also, caries lesions were labeled as decay in digital bitewing images. While in the data labeling procedure,

annotators reviewed the bitewing images at the same time, and labeling proceeded once they reached agreement (Fig. 3).

Detecting bitewing image’s side via convolutional neural network

with sigmoid activation function. The proposed model was trained for 30 epochs with a batch size of 32 and an initial learning rate of 0.001. Binary cross-entropy was used as the loss function and the Adam algorithm was chosen as the optimizer.

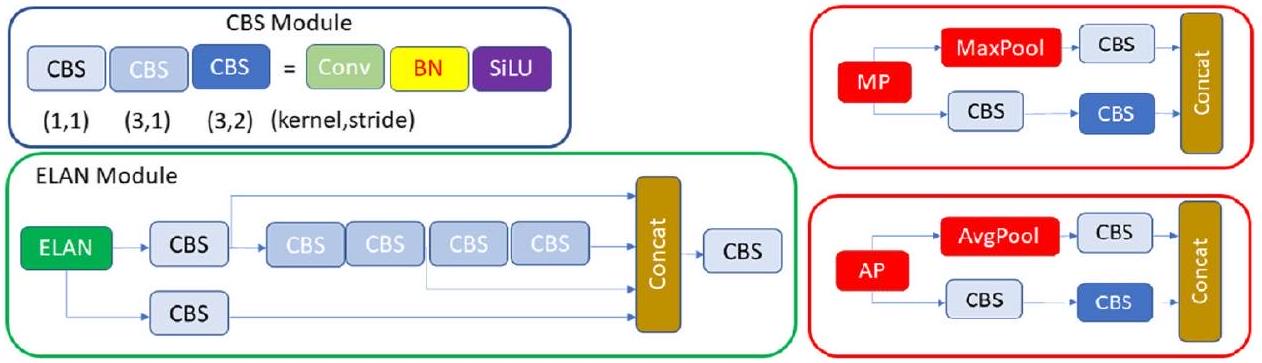

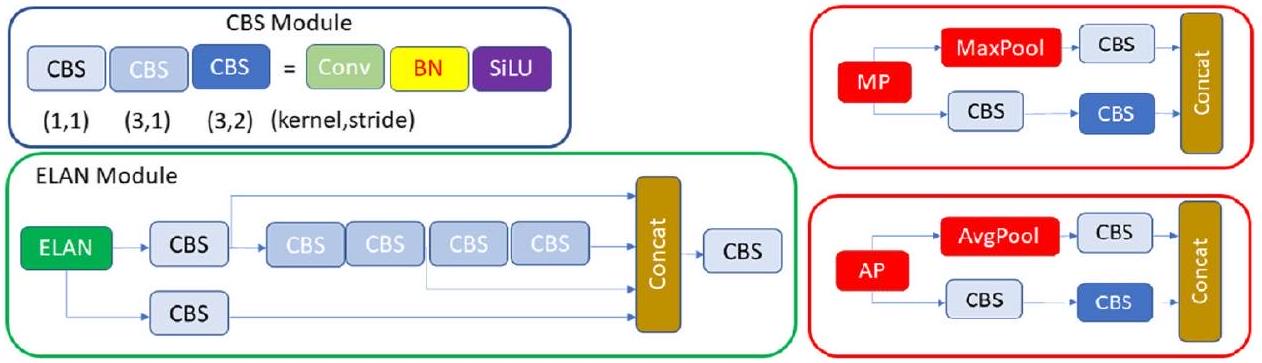

Improved YOLOv7 architecture, teeth numbering and caries detection

The architecture of original YOLOv7

and a lower branch and consists of CBS modules and a max pooling (MaxPool) layer (Fig. 4). In this module, the upper branch uses MaxPool to capture the maximum value information from small local areas, while the CBS modules in the lower branch extract all valuable information from small local areas. This dual approach enhances the network’s feature extraction capability.

Improved YOLOv7 with convolutional block attention module

the object within the image, thereby enabling more precise channel attention. These context descriptors are forwarded to a shared multi-layer network. The vectors produced by the multi-layer network are summed, and the channel attention map is generated by applying the sigmoid function. Within the CBAM module, the spatial attention module (SAM) targets the localization of the most informative regions within the input image. Spatial attention is computed by applying average pooling and max pooling along the channel axis of the input. The two resulting maps are merged into a two-channel map and passed through a standard convolutional layer, which converts it into a single-channel feature map that serves as an efficient feature descriptor. The output of the convolutional layer is passed through a sigmoid activation layer. The sigmoid function, a probability-based activation, maps all values to the range between 0 and 1, thereby yielding the spatial attention map. In summary, CBAM combines the two attention modules described above to determine what is important in the input image and to locate the descriptive regions within it. The CBAM, CAM, and SAM modules are shown in Fig. 6.
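The two attention steps can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the implementation used in the study. The function names, the tiny feature map, the MLP weights `w1` and `w2`, and the 3 x 3 spatial kernel are all hypothetical. The convolution kernel size is not given in this section.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(fmap, w1, w2):
    """fmap: list of C channels, each an H x W list of lists.
    w1 (hidden x C) and w2 (C x hidden) are the shared MLP weights."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(map(max, ch)) for ch in fmap]

    def mlp(v):
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]  # ReLU
        return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

    # Both descriptors pass through the same MLP and are summed element-wise.
    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(fmap, kernel):
    """kernel: 2 x k x k weights applied to the stacked [avg, max] maps (zero padding)."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    avg = [[sum(fmap[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(fmap[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for plane, src in enumerate((avg, mx)):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            acc += kernel[plane][di][dj] * src[y][x]
            out[i][j] = sigmoid(acc)  # values in (0, 1)
    return out


def cbam(fmap, w1, w2, kernel):
    """Apply channel attention first, then spatial attention."""
    mc = channel_attention(fmap, w1, w2)
    refined = [[[v * mc[ch] for v in row] for row in fmap[ch]] for ch in range(len(fmap))]
    ms = spatial_attention(refined, kernel)
    return [[[refined[ch][i][j] * ms[i][j] for j in range(len(ms[0]))]
             for i in range(len(ms))] for ch in range(len(fmap))]


# Hypothetical 2-channel, 3 x 3 feature map and weights, chosen only for illustration.
fmap = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
    [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]],
]
w1 = [[0.5, -0.25]]      # 1 hidden unit, 2 input channels
w2 = [[1.0], [-1.0]]     # 2 output channels, 1 hidden unit
kernel = [[[0.1] * 3 for _ in range(3)] for _ in range(2)]

mc = channel_attention(fmap, w1, w2)
out = cbam(fmap, w1, w2, kernel)
```

Because both attention maps lie strictly between 0 and 1, the module only rescales features: it keeps the input shape and attenuates channels and locations that the maps score as less informative.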

method was used instead of max pooling. Max pooling returns the maximum value in each window, while average pooling returns the average value in each window. The benefit of using average pooling instead of max pooling is that it can help reduce overfitting. Max pooling is more prone to memorization because it preserves only the strongest feature in each window, which may not represent the entire window. Average pooling, on the other hand, considers all features in each window, which can help preserve more information. Additionally, average pooling can smooth the output, which is useful when the input is noisy or volatile. The changes we made are shown in Fig. 7. This improvement was observed to have a positive effect on detection performance.
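The difference between the two pooling operations can be illustrated with a small sketch. The 2 x 2 window with stride 2 and the toy feature map (which contains one outlier value) are assumptions chosen for illustration, not settings taken from the study.

```python
def pool2d(image, size=2, stride=2, mode="max"):
    """Pool a 2D list of numbers with a square window (no padding)."""
    h, w = len(image), len(image[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [image[y][x]
                      for y in range(i, i + size)
                      for x in range(j, j + size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out


# Toy feature map: the 9.0 in the top-left window is an isolated spike.
feature = [
    [1.0, 1.0, 2.0, 2.0],
    [1.0, 9.0, 2.0, 2.0],
    [3.0, 3.0, 4.0, 4.0],
    [3.0, 3.0, 4.0, 4.0],
]

max_pooled = pool2d(feature, mode="max")  # [[9.0, 2.0], [3.0, 4.0]]
avg_pooled = pool2d(feature, mode="avg")  # [[3.0, 2.0], [3.0, 4.0]]
```

In the top-left window, max pooling passes the spike through unchanged, whereas average pooling blends it with its neighbors. This is the smoothing behavior described above.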

Evaluation of teeth numbering and caries detection matching
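The matching stage pairs each detected caries box with a numbered tooth box using the intersection over union (IoU) value. A minimal sketch of such a matching step is given here, assuming axis-aligned boxes in `(x1, y1, x2, y2)` format. The function names, the example coordinates, and the `threshold` parameter are hypothetical and are not taken from the study.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_caries_to_teeth(teeth, caries, threshold=0.0):
    """Assign each caries box to the numbered tooth with the highest IoU.

    teeth: dict mapping FDI tooth number -> box; caries: list of boxes.
    A caries box whose best IoU does not exceed `threshold` stays unmatched (None).
    """
    matches = {}
    for idx, caries_box in enumerate(caries):
        best_tooth, best_score = None, threshold
        for number, tooth_box in teeth.items():
            score = iou(tooth_box, caries_box)
            if score > best_score:
                best_tooth, best_score = number, score
        matches[idx] = best_tooth
    return matches


# Hypothetical detections: two numbered teeth and three caries boxes.
teeth = {16: (0, 0, 100, 100), 15: (100, 0, 180, 100)}
caries = [(80, 40, 100, 60), (150, 30, 170, 50), (300, 300, 310, 310)]

matches = match_caries_to_teeth(teeth, caries)  # {0: 16, 1: 15, 2: None}
```

Caries boxes are much smaller than tooth boxes, so the IoU between a lesion and its tooth is typically low. Any practical threshold would therefore need to be tuned, and the default of 0.0 used here is only a placeholder.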

Experimental setup

Performance evaluation analysis

Adaptation of the confusion matrix for evaluating performance of teeth numbering and caries detection matching

TP: the output in which the model correctly predicts the positive class (teeth correctly detected, correctly numbered, caries correctly detected on bitewing radiographs)

FP: the output in which the model incorrectly predicts the positive class (teeth correctly detected, correctly numbered, but caries prediction on sound surface; teeth correctly detected, but regardless of caries incorrectly numbered)

FN: the output in which the model incorrectly predicts the negative class (teeth correctly detected, correctly numbered, but no caries prediction on decayed surface)

TN: the output in which the model correctly predicts the negative class (teeth correctly detected, correctly numbered, no caries prediction when there was no caries)
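The performance metrics follow from these four counts through the standard definitions. The sketch below applies them to the "All" row of the matching results (TP = 500, FP = 78, FN = 113, TN = 2151). The function name is illustrative, and the computed values agree with the reported ones to within about 0.001.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard confusion-matrix metrics from raw counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    recall = tp / (tp + fn)          # sensitivity
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Counts from the "All" row of the numbering and caries matching results.
metrics = classification_metrics(tp=500, fp=78, fn=113, tn=2151)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The four counts sum to 2842, which equals the number of test surfaces listed in the dataset table.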

Results

Ablation Experiments

| | Recall | Precision | F1-score |
| --- | --- | --- | --- |
| Left side | 0.995 | 0.987 | 0.99 |
| Right side | 0.993 | 0.988 | 0.99 |
| All | 0.994 | 0.987 | 0.99 |

| | Recall | Precision | F1-score |
| --- | --- | --- | --- |
| Left side teeth numbering | 0.976 | 0.978 | 0.977 |
| Right side teeth numbering | 0.972 | 0.993 | 0.982 |
| Left side caries detection | 0.857 | 0.833 | 0.844 |
| Right side caries detection | 0.810 | 0.900 | 0.800 |
| Left side-numbering and caries detection | 0.966 | 0.966 | 0.966 |
| Right side-numbering and caries detection | 0.959 | 0.985 | 0.968 |
| Left and right-side numbering and caries detection-All | 0.962 | 0.975 | 0.967 |
| Teeth numbering-All | 0.974 | 0.985 | 0.979 |
| Caries detection-All | 0.833 | 0.866 | 0.849 |

| | TP | FP | FN | TN | Accuracy | Recall | Specificity | Precision | F1-score |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Left side | 240 | 48 | 47 | 1102 | 0.933 | 0.836 | 0.958 | 0.833 | 0.834 |
| Right side | 260 | 30 | 66 | 1049 | 0.931 | 0.797 | 0.972 | 0.896 | 0.843 |
| All | 500 | 78 | 113 | 2151 | 0.932 | 0.815 | 0.965 | 0.865 | 0.839 |

parameters, 103.8 GFLOPS, and a size of 75.2 MB in memory. A satisfactory performance increase was achieved with only a small increase in model complexity.

| Model | Class | Recall | Precision | F1-score |
| --- | --- | --- | --- | --- |
| Original YOLOv7-MP without splitting into right and left | Decay all | 0.676 | 0.707 | 0.691 |
| | Numbering all | 0.409 | 0.236 | 0.299 |
| Original YOLOv7-MP with splitting into right and left | Right side decay | 0.659 | 0.803 | |
| | Left side decay | 0.696 | 0.848 | |
| | Decay all | 0.677 | 0.825 | 0.743 |
| | Right side numbering | 0.971 | 0.974 | |
| | Left side numbering | 0.979 | 0.978 | |
| | Numbering all | 0.975 | 0.976 | 0.975 |
| YOLOv7-AP | Right side decay | 0.626 | 0.773 | |
| | Left side decay | 0.797 | 0.775 | |
| | Decay all | 0.711 | 0.774 | 0.741 |
| | Right side numbering | 0.967 | 0.977 | |
| | Left side numbering | 0.981 | 0.983 | |
| | Numbering all | 0.974 | 0.980 | 0.976 |
| YOLOv7-MP-CBAM | Right side decay | 0.579 | 0.838 | |
| | Left side decay | 0.754 | 0.832 | |
| | Decay all | 0.666 | 0.836 | 0.741 |
| | Right side numbering | 0.974 | 0.983 | |
| | Left side numbering | 0.981 | 0.978 | |
| | Numbering all | 0.977 | 0.980 | 0.978 |
| YOLOv7-AP-CBAM (ours) | Right side decay | 0.810 | 0.900 | |
| | Left side decay | 0.857 | 0.833 | |
| | All decay | 0.833 | 0.866 | 0.849 |
| | Right side numbering | 0.972 | 0.993 | |
| | Left side numbering | 0.976 | 0.978 | |
| | Numbering all | 0.974 | 0.985 | 0.979 |

Discussion

to its outstanding problem-solving capabilities. Within this context, numerous DL architectures have been developed, with CNNs in particular standing out for their impressive performance in image recognition tasks [56]. By using CNN models, practitioners can work more efficiently and reach more precise outcomes in the diagnostic process [34, 57].

on the left side in the training group. To avoid this problem, new bitewing images that include caries may be added to the right-side training set. Dataset bias is another problem; it occurs when specific samples in a dataset are over- or under-represented. To limit this bias, the dataset was built from images taken with the same devices in various clinics at different resolutions and exposure times. However, images obtained from different x-ray machines and from people with different demographic characteristics may affect the results. The proposed model’s parameters were determined using validation data during the training phase, and the model’s performance on unseen data was evaluated using test data separate from the training and validation data. CNN models have high hardware requirements, especially in the training phase. In our study, training and testing were performed on a 1080 Ti graphics card; computational performance will be higher on more advanced graphics cards. The dental caries lesions were detected and labeled by the annotators on the radiographic images and were not evaluated using the gold standard method of histological assessment [76]. The model had more difficulty accurately predicting teeth positioned at the corners of the bitewing image than other teeth. This could be related to the reduced clarity of these teeth and the limited amount of data in the training set. Also, the improved YOLOv7-AP-CBAM model was not compared with an expert.

Despite these limitations, the study has several significant strengths. It offered a comprehensive analysis by integrating the results of tooth detection, numbering, and caries detection, which gave a more cohesive understanding of the outcomes. Additionally, the experimental design split the data into right and left sides, enabling a more detailed analysis. This approach improved the performance metrics of our results. Furthermore, by adopting an interdisciplinary approach, our study introduced a novel perspective and makes an innovative contribution to the existing literature.

Conclusion

revealed that CNNs can provide valuable support to clinicians by automating the detection and numbering of teeth, as well as the detection of caries on bitewing radiographs. This can potentially contribute to assessment, improve overall performance, and ultimately save precious time.

E.A: Conceptualization, Software, Visualization, Data curation, Formal analysis, Writing – original draft, Writing – review & editing.

Y.B: Conceptualization, Methodology, Data acquisition and labeling, Writing – review & editing.

Declarations

References

- Featherstone JDB (2000) The science and practice of caries prevention. J Am Dent Assoc 131:887-899. https://doi.org/10.14219/jada.archive.2000.0307

- Mortensen D, Dannemand K, Twetman S, Keller MK (2014) Detection of non-cavitated occlusal caries with impedance spectroscopy and laser fluorescence: an in vitro study. Open Dent J 8:28-32. https://doi.org/10.2174/1874210601408010028

- Pitts NB (2004) Are we ready to move from operative to nonoperative/preventive treatment of dental caries in clinical practice? Caries Res 38:294-304. https://doi.org/10.1159/000077769

- Baelum V, Heidmann J, Nyvad B (2006) Dental caries paradigms in diagnosis and diagnostic research. Eur J Oral Sci 114:263-277. https://doi.org/10.1111/j.1600-0722.2006.00383.x

- Pitts NB, Stamm JW (2004) International consensus workshop on caries clinical trials (ICW-CCT)-final consensus statements: agreeing where the evidence leads. J Dent Res 83:125-128. https://doi.org/10.1177/154405910408301s27

- Selwitz RH, Ismail AI, Pitts NB (2007) Dental caries. The Lancet 369:51-59. https://doi.org/10.1016/S0140-6736(07)60031-2

- Chan M, Dadul T, Langlais R, Russell D, Ahmad M (2018) Accuracy of extraoral bite-wing radiography in detecting proximal caries and crestal bone loss. J Am Dent Assoc 149:51-58. https://doi.org/10.1016/j.adaj.2017.08.032

- Vandenberghe B, Jacobs R, Bosmans H (2010) Modern dental imaging: a review of the current technology and clinical applications in dental practice. Eur Radiol 20:2637-2655. https://doi.org/10.1007/s00330-010-1836-1

- Baelum V (2010) What is an appropriate caries diagnosis? Acta Odontol Scand 68:65-79. https://doi.org/10.3109/00016350903530786

- Kamburoğlu K, Kolsuz E, Murat S, Yüksel S, Özen T (2012) Proximal caries detection accuracy using intraoral bitewing radiography, extraoral bitewing radiography and panoramic radiography. Dentomaxillofacial Radiol 41:450-459. https://doi.org/10.1259/dmfr/30526171

- Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM (2020) The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofacial Radiol 49:20190107. https://doi.org/10.1259/dmfr.20190107

- Suzuki K (2017) Overview of deep learning in medical imaging. Radiol Phys Technol 10:257-273. https://doi.org/10.1007/s12194-017-0406-5

- Rodrigues JA, Krois J, Schwendicke F (2021) Demystifying artificial intelligence and deep learning in dentistry. Braz Oral Res 35. https://doi.org/10.1590/1807-3107bor-2021.vol35.0094

- Barr A, Feigenbaum EA, Cohen PR (1981) The handbook of artificial intelligence. In: Artificial Intelligence, William Kaufman Inc, California, pp 3-11

- Lee J-G, Jun S, Cho Y-W, Lee H, Kim GB, Seo JB, Kim N (2017) Deep learning in medical imaging: general overview. Korean J Radiol 18:570. https://doi.org/10.3348/kjr.2017.18.4.570

- LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436-444. https://doi.org/10.1038/nature14539

- Hubel DH, Wiesel TN (1968) Receptive fields and functional architecture of monkey striate cortex. J Physiol 195:215-243. https://doi.org/10.1113/jphysiol.1968.sp008455

- Schwendicke F, Samek W, Krois J (2020) Artificial intelligence in dentistry: chances and challenges. J Dent Res 99:769-774. https://doi.org/10.1177/0022034520915714

- Bayraktar Y, Ayan E (2022) Diagnosis of interproximal caries lesions with deep convolutional neural network in digital bitewing radiographs. Clin Oral Investig 26:623-632. https://doi.org/10.1007/s00784-021-04040-1

- Cantu AG, Gehrung S, Krois J, Chaurasia A, Rossi JG, Gaudin R, Elhennawy K, Schwendicke F (2020) Detecting caries lesions of different radiographic extension on bitewings using deep learning. J Dent 100:103425. https://doi.org/10.1016/j.jdent.2020.103425

- Choi J, Eun H, Kim C (2018) Boosting proximal dental caries detection via combination of variational methods and convolutional neural network. J Signal Process Syst 90:87-97. https://doi.org/10.1007/s11265-016-1214-6

- Lee J-H, Kim D-H, Jeong S-N, Choi S-H (2018) Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent 77:106-111. https://doi.org/10.1016/j.jdent.2018.07.015

- Moutselos K, Berdouses E, Oulis C, Maglogiannis I (2019) Recognizing occlusal caries in dental intraoral images using deep learning. In: 2019 41st Annu Int Conf IEEE Eng Med Biol Soc EMBC, IEEE, pp. 1617-1620. https://doi.org/10.1109/EMBC.2019.8856553

- Prajapati SA, Nagaraj R, Mitra S (2017) Classification of dental diseases using CNN and transfer learning. In: 2017 5th Int Symp Comput Bus Intell ISCBI, IEEE, pp. 70-74. https://doi.org/10.1109/ISCBI.2017.8053547

- Vidnes-Kopperud S, Tveit AB, Espelid I (2011) Changes in the treatment concept for approximal caries from 1983 to 2009 in Norway. Caries Res 45:113-120. https://doi.org/10.1159/000324810

- Moran M, Faria M, Giraldi G, Bastos L, Oliveira L, Conci A (2021) Classification of approximal caries in bitewing radiographs using convolutional neural networks. Sensors 21:5192. https://doi.org/10.3390/s21155192

- Srivastava MM, Kumar P, Pradhan L, Varadarajan S (2017) Detection of tooth caries in bitewing radiographs using deep learning. http://arxiv.org/abs/1711.07312. Accessed 7 July 2023

- Leite AF, Gerven AV, Willems H, Beznik T, Lahoud P, Gaêta-Araujo H, Vranckx M, Jacobs R (2021) Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin Oral Investig 25:2257-2267. https://doi.org/10.1007/s00784-020-03544-6

- Yasa Y, Çelik Ö, Bayrakdar IS, Pekince A, Orhan K, Akarsu S, Atasoy S, Bilgir E, Odabaş A, Aslan AF (2021) An artificial intelligence proposal to automatic teeth detection and numbering in dental bite-wing radiographs. Acta Odontol Scand 79:275-281. https://doi.org/10.1080/00016357.2020.1840624

- Bilgir E, Bayrakdar İŞ, Çelik Ö, Orhan K, Akkoca F, Sağlam H, Odabaş A, Aslan AF, Ozcetin C, Kıllı M, Rozylo-Kalinowska I (2021) An artificial intelligence approach to automatic tooth detection and numbering in panoramic radiographs. BMC Med Imaging 21:124. https://doi.org/10.1186/s12880-021-00656-7

- Kaya E, Gunec HG, Gokyay SS, Kutal S, Gulum S, Ates HF (2022) Proposing a CNN method for primary and permanent tooth detection and enumeration on pediatric dental radiographs. J Clin Pediatr Dent 46:293. https://doi.org/10.22514/1053-4625-46.4.6

- Kılıc MC, Bayrakdar IS, Çelik Ö, Bilgir E, Orhan K, Aydın OB, Kaplan FA, Sağlam H, Odabaş A, Aslan AF, Yılmaz AB (2021) Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofacial Radiol 50:20200172. https://doi.org/10.1259/dmfr.20200172

- Tekin BY, Ozcan C, Pekince A, Yasa Y (2022) An enhanced tooth segmentation and numbering according to FDI notation in bitewing radiographs. Comput Biol Med 146:105547. https://doi.org/10.1016/j.compbiomed.2022.105547

- Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, Sveshnikov MM, Bednenko GB (2019) Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofacial Radiol 48:20180051. https://doi.org/10.1259/dmfr.20180051

- Topol EJ (2019) High-performance medicine: the convergence of human and artificial intelligence. Nat Med 25:44-56. https://doi.org/10.1038/s41591-018-0300-7

- Masood M, Masood Y, Newton JT (2015) The clustering effects of surfaces within the tooth and teeth within individuals. J Dent Res 94:281-288. https://doi.org/10.1177/0022034514559408

- Chen X, Guo J, Ye J, Zhang M, Liang Y (2022) Detection of proximal caries lesions on bitewing radiographs using deep learning method. Caries Res 56:455-463. https://doi.org/10.1159/000527418

- Huang G, Liu Z, van der Maaten L, Weinberger KQ (2016) Densely connected convolutional networks. https://doi.org/10.48550/ARXIV.1608.06993

- Girshick R (2015) Fast R-CNN. In: 2015 IEEE Int Conf Comput Vis ICCV, IEEE, Santiago, Chile, pp. 1440-1448. https://doi.org/10.1109/ICCV.2015.169

- Ren S, He K, Girshick R, Sun J (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 39:1137-1149. https://doi.org/10.1109/TPAMI.2016.2577031

- He K, Gkioxari G, Dollar P, Girshick R (2017) Mask R-CNN. In: 2017 IEEE Int Conf Comput Vis ICCV, IEEE, Venice, pp. 2980-2988. https://doi.org/10.1109/ICCV.2017.322

- Terven J, Cordova-Esparza D (2023) A Comprehensive Review of YOLO: From YOLOv1 and Beyond. http://arxiv.org/abs/2304.00501. Accessed 7 July 2023

- Wang C-Y, Bochkovskiy A, Liao H-YM (2022) YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. https://doi.org/10.48550/ARXIV.2207.02696

- Ding X, Zhang X, Ma N, Han J, Ding G, Sun J (2021) RepVGG: Making VGG-style ConvNets Great Again. In: 2021 IEEE/CVF Conf Comput Vis Pattern Recognit CVPR, IEEE, Nashville, TN, USA, pp. 13728-13737. https://doi.org/10.1109/CVPR46437.2021.01352

- Larochelle H, Hinton G (2010) Learning to combine foveal glimpses with a third-order Boltzmann machine. Adv Neural Inform Process Syst 23:1243-1251

- Woo S, Park J, Lee J-Y, Kweon IS (2018) CBAM: Convolutional Block Attention Module. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (Eds.). Comput Vis-ECCV 2018, Springer International Publishing, Cham, pp. 3-19. https://doi.org/10.1007/978-3-030-01234-2_1

- Shi J, Yang J, Zhang Y (2022) Research on steel surface defect detection based on YOLOv5 with attention mechanism. Electronics 11:3735. https://doi.org/10.3390/electronics11223735

- Xue Z, Xu R, Bai D, Lin H (2023) YOLO-tea: a tea disease detection model improved by YOLOv5. Forests 14:415. https://doi.org/10.3390/f14020415

- De Moraes JL, De Oliveira Neto J, Badue C, Oliveira-Santos T, De Souza AF (2023) Yolo-papaya: a papaya fruit disease detector and classifier using CNNs and convolutional block attention modules. Electronics 12:2202. https://doi.org/10.3390/electronics12102202

- Yan J, Zhou Z, Zhou D, Su B, Xuanyuan Z, Tang J, Lai Y, Chen J, Liang W (2022) Underwater object detection algorithm based on attention mechanism and cross-stage partial fast spatial pyramidal pooling. Front Mar Sci 9:1056300. https://doi.org/10.3389/fmars.2022.1056300

- Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A, Kopf A, Yang E, DeVito Z, Raison M, Tejani A, Chilamkurthy S, Steiner B, Fang L, Bai J, Chintala S (2019) PyTorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst 32:8024-8035

- Chollet F et al (2015) Keras. https://github.com/fchollet/keras. Accessed 7 July 2023

- Bradski G (2000) The OpenCV library. Dr. Dobb’s Journal: Software Tools for the Professional Programmer 25:120-123

- Zhang Y, Li J, Fu W, Ma J, Wang G (2023) A lightweight YOLOv7 insulator defect detection algorithm based on DSC-SE. PLoS One 18:e0289162. https://doi.org/10.1371/journal.pone.0289162

- Gomez J (2015) Detection and diagnosis of the early caries lesion. BMC Oral Health 15:S3. https://doi.org/10.1186/1472-6831-15-S1-S3

- Yasaka K, Akai H, Kunimatsu A, Kiryu S, Abe O (2018) Deep learning with convolutional neural network in radiology. Jpn J Radiol 36:257-272. https://doi.org/10.1007/s11604-018-0726-3

- Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, Lee C-H (2019) A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep 9:3840. https://doi.org/10.1038/s41598-019-40414-y

- Casalegno F, Newton T, Daher R, Abdelaziz M, Lodi-Rizzini A, Schürmann F, Krejci I, Markram H (2019) Caries detection with near-infrared transillumination using deep learning. J Dent Res 98:1227-1233. https://doi.org/10.1177/0022034519871884

- Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, Nakata K, Katsumata A, Fujita H, Ariji E (2020) Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol 36:337-343. https://doi.org/10.1007/s11282-019-00409-x

- Lee J-H, Kim D, Jeong S-N, Choi S-H (2018) Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci 48:114. https://doi.org/10.5051/jpis.2018.48.2.114

- Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, Kise Y, Nozawa M, Katsumata A, Fujita H, Ariji E (2019) Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol 35:301-307. https://doi.org/10.1007/s11282-018-0363-7

- Park J-H, Hwang H-W, Moon J-H, Yu Y, Kim H, Her S-B, Srinivasan G, Aljanabi MNA, Donatelli RE, Lee S-J (2019) Automated identification of cephalometric landmarks: Part 1-Comparisons between the latest deep-learning methods YOLOV3 and SSD. Angle Orthod 89:903-909. https://doi.org/10.2319/022019-127.1

- Pauwels R, Brasil DM, Yamasaki MC, Jacobs R, Bosmans H, Freitas DQ, Haiter-Neto F (2021) Artificial intelligence for detection of periapical lesions on intraoral radiographs: Comparison between convolutional neural networks and human observers. Oral Surg Oral Med Oral Pathol Oral Radiol 131:610-616. https://doi.org/10.1016/j.oooo.2021.01.018

- Rockenbach MI, Veeck EB, da Costa NP (2008) Detection of proximal caries in conventional and digital radiographs: an in vitro study. Stomatologija 10:115-120

- Abdinian M, Razavi SM, Faghihian R, Samety AA, Faghihian E (2015) Accuracy of digital bitewing radiography versus different views of digital panoramic radiography for detection of proximal caries. J Dent Tehran Iran 12:290-297

- Hintze H (1993) Screening with conventional and digital bitewing radiography compared to clinical examination alone for caries detection in low-risk children. Caries Res 27:499-504. https://doi.org/10.1159/000261588

- Peker İ, Toraman Alkurt M, Altunkaynak B (2007) Film tomography compared with film and digital bitewing radiography for proximal caries detection. Dentomaxillofacial Radiol 36:495-499. https://doi.org/10.1259/dmfr/13319800

- Schaefer G, Pitchika V, Litzenburger F, Hickel R, Kühnisch J (2018) Evaluation of occlusal caries detection and assessment by visual inspection, digital bitewing radiography and near-infrared light transillumination. Clin Oral Investig 22:2431-2438. https://doi.org/10.1007/s00784-018-2512-0

- Wenzel A (2004) Bitewing and digital bitewing radiography for detection of caries lesions. J Dent Res 83:72-75. https://doi.org/10.1177/154405910408301s14

- Mahoor MH, Abdel-Mottaleb M (2005) Classification and numbering of teeth in dental bitewing images. Pattern Recognit 38:577-586. https://doi.org/10.1016/j.patcog.2004.08.012

- Reed BE, Polson AM (1984) Relationships between bitewing and periapical radiographs in assessing Crestal Alveolar Bone Levels. J Periodontol 55:22-27. https://doi.org/10.1902/jop.1984.55.1.22

- Shaheen E, Leite A, Alqahtani KA, Smolders A, Van Gerven A, Willems H, Jacobs R (2021) A novel deep learning system for multi-class tooth segmentation and classification on cone beam computed tomography. A validation study. J Dent 115:103865. https://doi.org/10.1016/j.jdent.2021.103865

- Zhang K, Wu J, Chen H, Lyu P (2018) An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph 68:61-70. https://doi.org/10.1016/j.compmedimag.2018.07.001

- Mao Y-C, Chen T-Y, Chou H-S, Lin S-Y, Liu S-Y, Chen Y-A, Liu Y-L, Chen C-A, Huang Y-C, Chen S-L, Li C-W, Abu PAR, Chiang W-Y (2021) Caries and restoration detection using bitewing film based on transfer learning with CNNs. Sensors 21:4613. https://doi.org/10.3390/s21134613

- Koppanyi Z, Iwaszczuk D, Zha B, Saul CJ, Toth CK, Yilmaz A (2019) Multimodal semantic segmentation: fusion of RGB and depth data in convolutional neural networks. In: Multimodal Scene Understanding, Elsevier, pp. 41-64. https://doi.org/10.1016/B978-0-12-817358-9.00009-3

- Subka S, Rodd H, Nugent Z, Deery C (2019) In vivo validity of proximal caries detection in primary teeth, with histological validation. Int J Paediatr Dent 29:429-438. https://doi.org/10.1111/ipd.12478

Baturalp Ayhan

baturalpayhan@kku.edu.tr

Enes Ayan

enesayan@kku.edu.tr

Yusuf Bayraktar

yusufbayraktar@kku.edu.tr